User:Jcreer/SMS:Toolbox: Difference between revisions


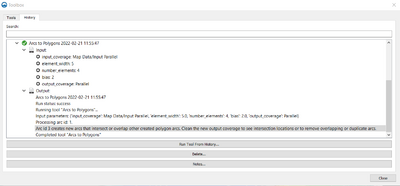


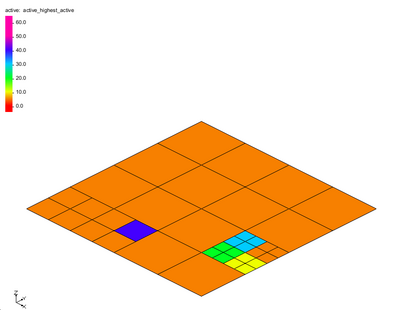

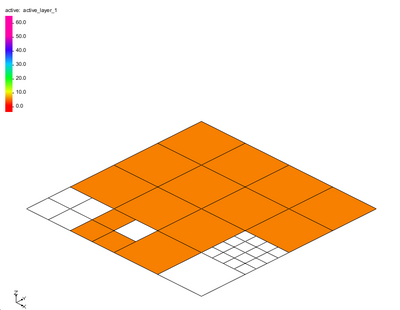

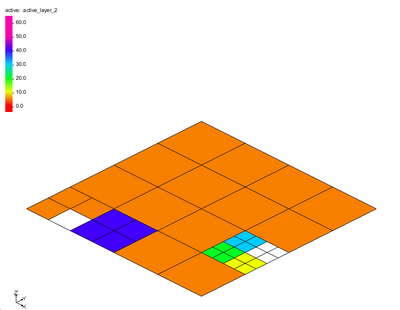

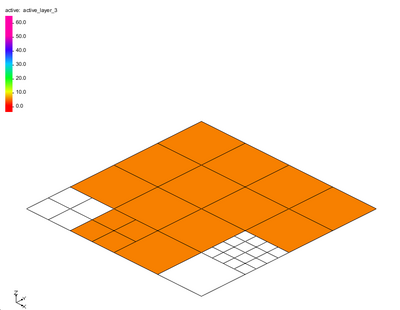

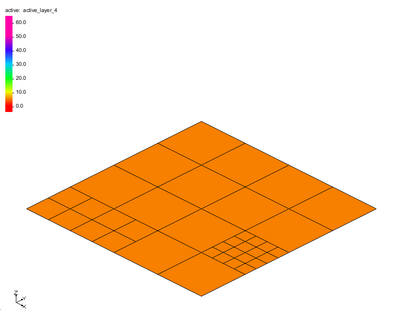

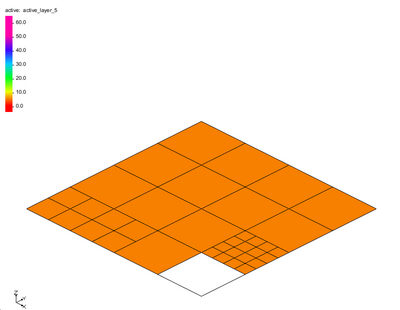


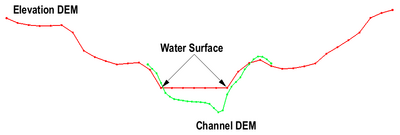

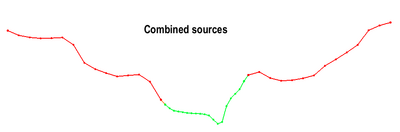


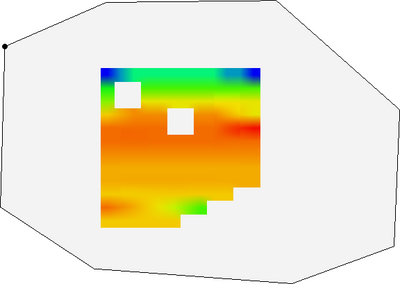

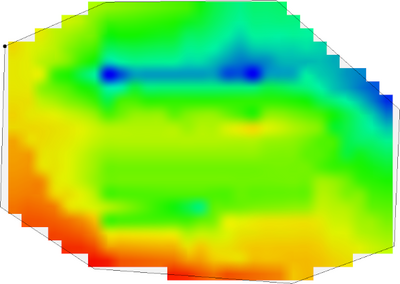


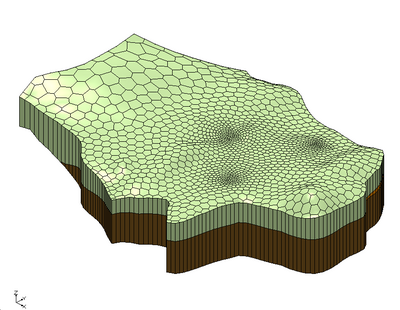

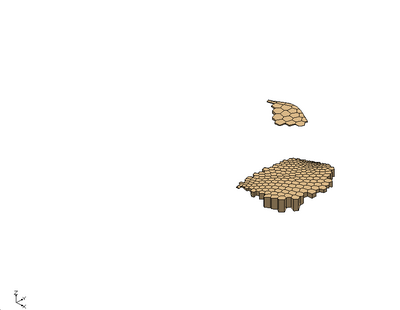


Latest revision as of 20:45, 30 June 2022

Starting with version 13.2, SMS includes a general-purpose toolbox that allows the SMS process to interact with external Python scripts. In SMS 13.2 this is a beta feature. In this version, the user accesses the toolbox by selecting the toolbox icon in the macro toolbar, which brings up the SMS Toolbox dialog.


SMS Toolbox

Tools in the toolbox allow the user to operate on data in an SMS interactive session as well as data in external files. Each tool in the toolbox is linked to a Python script that produces a specific output. Launch a tool either by double-clicking it or by selecting it and clicking the "Run Tool..." button at the bottom of the toolbox.

Example outputs from a tool include, but are not limited to:

- a graphic or plot.

- a report (document or spreadsheet) for a specified analytical process.

- a new geometry item in SMS (e.g., a new mesh or UGrid).

- a new dataset in SMS.

- a new data file.

Tools Tab

Available tools in the SMS Toolbox dialog are divided into categories, typically based on the type of output or the type of arguments the tool uses. The current categories are:

- ADCIRC – Contains tools for modifying ADCIRC meshes and boundary conditions to honor externally specified levee elevation data. (Note: requires a license for the ADCIRC interface.)

- Coverages – Contains tools for manipulating and creating coverages.

- Datasets – Contains tools for manipulating datasets attached to meshes, scatter sets, or UGrids.

- Lidar – Contains tools for processing lidar point clouds.

- Rasters – Contains tools for manipulating raster files in the GIS module.

- Unstructured Grids – Contains tools for manipulating UGrids, scatter sets, and meshes. (Note: all of these geometries can be operated on as UGrids in python scripts.)

History Tab

The toolbox maintains a history of all tools invoked in an SMS project.

The history section lists each tool and the date and time it was invoked. Selecting an entry in the History tab launches the listed tool, with the input fields populated with the arguments used in the previous invocation. These inputs can be changed for the new invocation of the tool.

The History tab has the following options:

- Run Tool From History – Clicking this will run the tool again using the same parameters.

- Delete – Removes the run from the history.

- Notes – Brings up the Notes dialog which allows notes to be attached to the run.

ADCIRC Tools

Check/Fix Levee Crest Elevations

The Check/Fix Levee Crest Elevations tool checks and adjusts the Z Crest attributes of ADCIRC levee boundaries (in an ADCIRC BC coverage) against a set of elevation lines (check lines) contained in a specified Map module coverage.

Input Parameters

- Input ADCIRC Boundary Conditions coverage – Coverage containing the arcs that represent the levees to be checked. Each levee crest curve is associated with a pair of arcs.

- Note: When an ADCIRC simulation is loaded from native model files, a set of mapped boundary conditions is created on the simulation. This must be converted to a coverage (right-click on the mapped boundary condition object) to create the arcs that are used in this tool.

- Domain Grid – The 2DMesh of the ADCIRC domain.

- Note: The tool will actually work with a UGrid or a 2DMesh. ADCIRC simulations currently take 2DMesh objects.

- Input check geometry coverage – Coverage containing the arcs which define the "correct" elevation. This may be a coverage of any type. Typically this coverage comes from a shapefile or CAD file of measured or designed levee crests.

- TauZ (allowable levee elevation error threshold) – This is the allowable error between the crest elevation defined in the ADCIRC BC file and the check elevation. Typically this would be 0.0.

Output Parameters

- Output coverage – The name to assign to the output ADCIRC Boundary Conditions coverage with adjusted levee crests.

Operation

The tool checks each selected levee arc, or all levee arcs in the coverage if no arcs are selected. For each levee arc pair the tool does the following:

- Maps or snaps the levee arcs to the 2D mesh to get the levee node pairs.

- Identifies "check lines" from the check coverage that apply to this levee using segments created between each levee mesh node pair.

- Ensures that only one check line exists. Multiple check lines for any node pair results in an error message because the action to take becomes ambiguous.

- Extracts the defined crest elevation for each node pair.

- Extracts one (and only one) check elevation for each node pair. Existence of multiple check elevations results in an error message.

- Adjusts the crest elevation at the parametric value of the node pair if it does not match (within the specified threshold). Variation by more than the specified threshold results in notification that the levee crest is being adjusted.

- Performs validity checks for the levee.

- Invalid levee definition: Ensures that the levee definition is valid for the selected 2D mesh. This is based on the mapping operation. A levee is invalid if it does not line up with a “hole” in the mesh for that levee or if the number of nodes on opposite sides of that hole is not consistent. Invalid levees are not deleted from the boundary condition file. They are left unchanged (i.e., the crest elevations of the invalid levee will be the same as in the input Boundary Conditions coverage). If this check fails, no other checks are performed.

- Node(s) in multiple levees: Checks whether any node in the levee is also used by another levee. If a mesh node appears in more than one levee definition after snapping the input levee boundaries to the domain mesh, this global warning will be reported. While this is a legal and common condition, the behavior of ADCIRC at these nodes is not well defined.

- No check line: If an input levee boundary does not have at least one valid intersection between its node pair segments and the input check geometry, this error will be reported. The crest elevations for the levee in the output coverage will be the same as the input. Note that a trivial reject check is performed on each levee. If the extents of an input levee pair are completely outside the bounds of the input check geometry, the levee is excluded from the results report. The crest elevations of the levee will not be adjusted in the output coverage and a global warning will be reported.

- Gaps in check line or incomplete check line: If there are one or more node pair segments on an input levee boundary that do not intersect with the input check geometry coverage, this warning will be reported. The crest elevations of these levee node pairs will not be adjusted in the output coverage. The crest elevations of all other node pairs on the levee that do intersect with the check geometry will be adjusted if needed. This condition can occur if there is a gap in the input check coverage feature arcs or the input check feature arcs do not extend through an entire levee.

- Multiple check line intersection(s): If any levee node pair segment has more than one intersection with the check geometry and the delta Z for any of those intersections is greater than the input TauZ, this error will be reported and the crest elevation at this node pair will not be adjusted in the output coverage. All other node pair segments in the levee that have a valid intersection will be checked and adjusted as needed.

- Intersection(s) required buffering: If a levee node pair segment does not intersect with the input check geometry, a second attempt is made with a buffered segment that is created by extending the original segment on both sides by a length that is equal to the levee width at that node pair. If a valid intersection is made with the buffered segment, it will be treated as a valid intersection for the purposes of checking and adjusting the output crest elevation, but this warning will be reported for the levee node pair.

- Partially unused check line: If a check line extends beyond the first or last node pair by a distance that is greater than 1.5 times the distance between the first/last node pair and its adjacent node pair, this warning will be reported. All node pairs on the levee will be checked and adjusted as normal.

- Potential units mismatch: If either the defined levee crest elevation or the check geometry elevation is greater than the other by at least a factor of three, this warning is reported for the levee (only once per levee). This indicates a likely feet/meter vertical units mismatch between the input Boundary Conditions coverage and the input check geometry coverage. In that case the plots will show a difference in elevations and the results of the tool should be rejected. The user should then make the units of the two coverages consistent and run the tool again.
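The per-node-pair comparison described above can be summarized in a short Python sketch. It is not the tool's script. The names `check_node_pair`, `check_levee`, and `NodePairResult` are hypothetical. The sketch assumes the crest is replaced by the check elevation whenever the two differ by more than TauZ, and that the factor-of-three units test applies only when both elevations are positive.

```python
from dataclasses import dataclass


@dataclass
class NodePairResult:
    """Outcome of checking one levee node pair (hypothetical structure)."""
    original_crest: float
    adjusted_crest: float
    adjusted: bool
    units_mismatch: bool


def check_node_pair(crest: float, check: float, tau_z: float = 0.0) -> NodePairResult:
    """Compare a defined crest elevation with its single check-line elevation.

    Assumptions: the crest is replaced by the check elevation when the
    absolute difference exceeds tau_z, and a possible feet/meter mismatch is
    flagged when one positive elevation is at least three times the other.
    """
    if tau_z < 0.0:
        raise ValueError("tau_z must be non-negative")
    adjusted = abs(check - crest) > tau_z
    new_crest = check if adjusted else crest

    units_mismatch = False
    if crest > 0.0 and check > 0.0:
        # Ratio of the larger elevation to the smaller one.
        units_mismatch = max(crest, check) / min(crest, check) >= 3.0

    return NodePairResult(crest, new_crest, adjusted, units_mismatch)


def check_levee(crests: list[float], checks: list[float], tau_z: float = 0.0):
    """Check every node pair of one levee; the mismatch flag is levee-wide."""
    if len(crests) != len(checks):
        raise ValueError("need exactly one check elevation per node pair")
    results = [check_node_pair(c, k, tau_z) for c, k in zip(crests, checks)]
    mismatch = any(r.units_mismatch for r in results)
    return results, mismatch
```

For example, `check_node_pair(3.0, 9.9)` flags a probable units mismatch because 9.9 is more than three times 3.0. Returning a single levee-wide flag mirrors the rule that the warning is reported only once per levee.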

Once the calculations are complete the tool brings up a plot/report window as described below. After reviewing the data/changes generated by the tool, the user may accept them by clicking the OK button or reject them using the Cancel button. Clicking OK results in the creation of the new ADCIRC boundary condition coverage (with edited crest elevations) if any edits were needed.
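Two geometric rules from the validity checks above can be sketched in Python: the buffered retry for node pair segments and the partially unused check line test. The function names are hypothetical. The sketch assumes the levee width at a node pair is the distance between its two nodes.

```python
import math

Point = tuple[float, float]


def buffered_segment(p1: Point, p2: Point) -> tuple[Point, Point]:
    """Extend a levee node-pair segment on both ends.

    Assumption: the levee width at the node pair is the distance between the
    two nodes, so each end is pushed outward by that same length.
    """
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    width = math.hypot(dx, dy)
    if width == 0.0:
        raise ValueError("node pair nodes must be distinct")
    ux, uy = dx / width, dy / width  # unit vector from p1 toward p2
    start = (p1[0] - ux * width, p1[1] - uy * width)
    end = (p2[0] + ux * width, p2[1] + uy * width)
    return start, end


def partially_unused(overhang: float, end_pair_spacing: float) -> bool:
    """True when a check line extends past the first/last node pair by more
    than 1.5 times the spacing between that end pair and its neighbor."""
    return overhang > 1.5 * end_pair_spacing
```

With this assumption, the buffered segment is three times as long as the original node pair segment.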

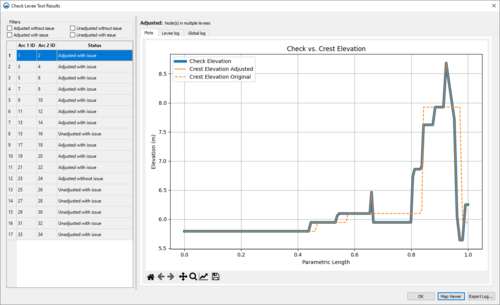

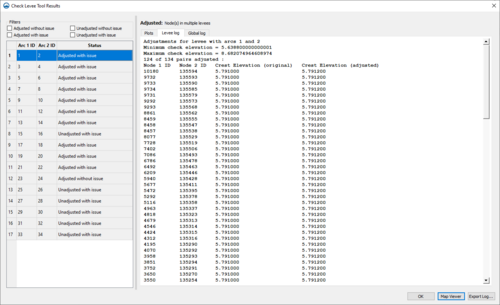

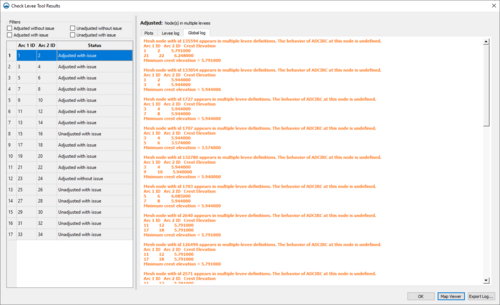

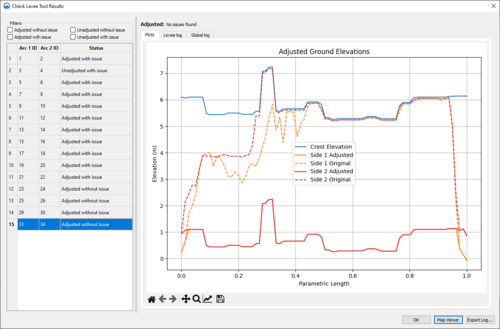

Reviewing Results

After the checks are complete a plot/results window appears if there were any adjustments made. The report window contains a table where each row represents a levee pair. In a pane to the right of the table, a plot is displayed for the currently selected levee. The x-axis is parametric distance along the arc and the y-axis is elevation. Curves are drawn for the check elevation, the original levee crest elevation, and the adjusted levee crest elevation.

The right side pane also contains tabs for viewing log output for the currently selected levee and global level log messages.

The levees shown in the table and drawn in the map viewer can be filtered based on the four possible check statuses. The check status and a short description of the detected issues is displayed in a label on the right side pane.

- Adjusted: Node(s) in multiple levees, gaps in check line or incomplete check line.

- Adjusted without issue: Indicates that the crest elevations for the levee did need adjusting but no other issues were detected.

- Adjusted with issue: Indicates that the crest elevations for the levee did need adjusting and one or more other issues were detected. See the Validity checks section for the possible issues that may be detected.

- Unadjusted without issue: Indicates that the crest elevations for the levee did not need adjusting and no other issues were detected.

- Unadjusted with issue: Indicates that the crest elevations for the levee were not adjusted but one or more other issues were detected. See the Validity checks section for the possible issues that may be detected.

Clicking the OK button closes both results dialogs and returns the user to the tool runner dialog. There the user can either click OK to accept the adjustments and create the new Boundary Conditions coverage in SMS, or click “Cancel” to reject the adjustments.

Clicking the Map Viewer button brings the Map Viewer dialog to the front. The Map Viewer is modeless, so it can be hidden behind other windows.

Clicking the Export Log... button prompts the user for a filename and writes the global and levee-specific logs to the selected file.

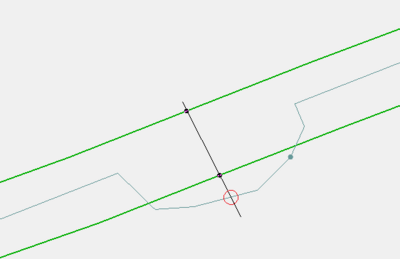

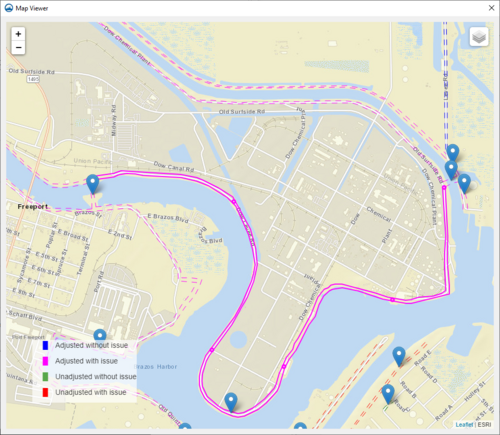

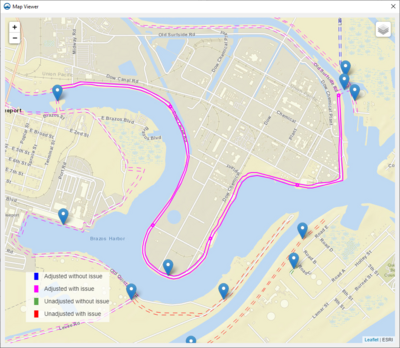

Map Viewer

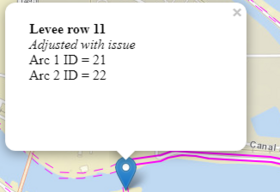

The Map Viewer contains a map view of all the levee arcs currently being displayed in the levee results table. The currently selected levee pair in the levee results table is drawn with solid lines in the map viewer and all other levees are drawn with dashed lines. Point markers are drawn in the middle of the levee at 20% intervals that match the parametric length ticks on the x-axis of the levee result plot.
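Placing markers at 20% intervals amounts to sampling a polyline at fixed fractions of its total length. Below is a minimal Python sketch. It assumes the levee is represented by a single centerline polyline of (x, y) points. The names `point_at_fraction` and `marker_points` are hypothetical.

```python
import math

Point = tuple[float, float]


def point_at_fraction(polyline: list[Point], t: float) -> Point:
    """Return the point at parametric length fraction t (0..1) along a polyline."""
    if len(polyline) < 2:
        raise ValueError("polyline needs at least two points")
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0 and 1")
    seg_lengths = [math.dist(a, b) for a, b in zip(polyline, polyline[1:])]
    target = t * sum(seg_lengths)
    for (a, b), length in zip(zip(polyline, polyline[1:]), seg_lengths):
        if target <= length and length > 0.0:
            s = target / length  # fraction along this segment
            return (a[0] + s * (b[0] - a[0]), a[1] + s * (b[1] - a[1]))
        target -= length
    return polyline[-1]  # guards against floating-point round-off at t == 1


def marker_points(polyline: list[Point], step: float = 0.2) -> list[Point]:
    """Points at 0%, 20%, ..., 100% of the parametric length."""
    count = round(1.0 / step)
    return [point_at_fraction(polyline, i / count) for i in range(count + 1)]
```

Using fractions of total length, rather than vertex indices, keeps the markers aligned with the parametric ticks on the plot's x-axis regardless of how vertices are spaced.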

A marker in the middle of each levee displays a short description of the levee pair when clicked.

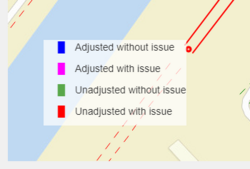

The color of the levee arcs is determined by check status.

- Adjusted without issue: Blue

- Adjusted with issue: Magenta

- Unadjusted without issue: Green

- Unadjusted with issue: Red
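The four check statuses and their Map Viewer colors can be expressed as a small lookup. This is an illustrative sketch only. The names `CheckStatus`, `classify`, and `filter_levees` are hypothetical. The sketch assumes a levee's status depends only on whether it was adjusted and whether any issues were detected.

```python
from enum import Enum


class CheckStatus(Enum):
    ADJUSTED_WITHOUT_ISSUE = "Adjusted without issue"
    ADJUSTED_WITH_ISSUE = "Adjusted with issue"
    UNADJUSTED_WITHOUT_ISSUE = "Unadjusted without issue"
    UNADJUSTED_WITH_ISSUE = "Unadjusted with issue"


# Arc colors used by the Map Viewer for each status.
STATUS_COLORS = {
    CheckStatus.ADJUSTED_WITHOUT_ISSUE: "blue",
    CheckStatus.ADJUSTED_WITH_ISSUE: "magenta",
    CheckStatus.UNADJUSTED_WITHOUT_ISSUE: "green",
    CheckStatus.UNADJUSTED_WITH_ISSUE: "red",
}


def classify(adjusted: bool, issues: list[str]) -> CheckStatus:
    """Pick a status from the adjustment flag and the list of detected issues."""
    if adjusted:
        return CheckStatus.ADJUSTED_WITH_ISSUE if issues else CheckStatus.ADJUSTED_WITHOUT_ISSUE
    return CheckStatus.UNADJUSTED_WITH_ISSUE if issues else CheckStatus.UNADJUSTED_WITHOUT_ISSUE


def filter_levees(levees: dict[str, CheckStatus], shown: set[CheckStatus]) -> list[str]:
    """Return the ids of levees whose status is in the selected filter set."""
    return [name for name, status in levees.items() if status in shown]
```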

The zoom level can be manually adjusted with the control in the top left of the dialog.

The layer control in the top right of the dialog can be used to switch between the “ESRI Street Map” and “ESRI World Imagery” background map layers.

The bottom left corner of the dialog contains a legend for the possible levee check status colors.

Current Location in Toolbox

ADCIRC|Check/Fix Levee Crest Elevations

Related Tools

Check/Fix Levee Ground Elevations

The Check/Fix Levee Ground Elevations tool checks and lowers, if needed, the elevations of an ADCIRC domain based on the crest elevations defined in an ADCIRC Boundary Conditions coverage. ADCIRC requires that the ground elevation specified for the nodes on either side of a levee structure be lower than the crest elevation of the levee. Failure to meet this condition results in a model run failure. This tool creates a new dataset that can be mapped as the elevation for the 2D mesh to ensure compliance with the levee crest requirement.

Input Parameters

- Input ADCIRC Boundary Conditions coverage – Coverage containing the arcs that represent the levees to be checked. Each levee crest curve is associated with a pair of arcs.

- Note: When an ADCIRC simulation is loaded from native model files, a set of mapped boundary conditions is created on the simulation. This must be converted to a coverage (right-click on the mapped boundary condition object) to create the arcs that are used in this tool.

- Domain Grid – The 2DMesh of the ADCIRC domain. (Note: The tool will actually work with a UGrid or a 2DMesh. ADCIRC simulations currently take 2DMesh objects.)

- Minimum height of crest elevation above ground – This is the user specified minimum difference between the crest elevation defined in the ADCIRC BC file and the mesh elevation. Strictly speaking, this just needs to be a positive value.

Output Parameters

- Output dataset – The name to assign to the output dataset, which can be mapped as the mesh elevation to comply with the ADCIRC elevation requirement.

- Output difference dataset – The name to assign to the output dataset that can be displayed to visualize the difference imposed by the tool.

Operation

The tool checks each selected levee arc, or all levee arcs in the coverage if no arcs are selected. For each levee arc pair the tool does the following:

- Maps or snaps the levee arcs to the 2D mesh to get the levee node pairs.

- Extracts the elevations for both nodes in the node pair.

- Extracts the defined crest elevation for each node pair.

- Lowers the elevation of either node in the pair (or both nodes, if both are invalid) whose elevation is not at least the specified minimum height below the defined crest elevation.

- Performs validity checks for the levee.

- Invalid levee definition: Ensures that the levee definition is valid for the selected 2D mesh. This is based on the mapping operation. A levee is invalid if it does not line up with a "hole" in the mesh for that levee or if the number of nodes on opposite sides of that hole is not consistent. Invalid levees are not deleted from the boundary condition file. They are left unchanged (i.e., the crest elevations of the invalid levee will be the same as in the input Boundary Conditions coverage). If this check fails, no other checks are performed.

- Node(s) in multiple levees: Checks whether any node in the levee is also used by another levee. If a mesh node appears in more than one levee definition after snapping the input levee boundaries to the domain mesh, this global warning will be reported. While this is a legal and common condition, the behavior of ADCIRC at these nodes is not well defined.
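The ground adjustment rule above can be sketched as a pass over the levee node pairs. This is a minimal Python illustration under stated assumptions, not the tool's implementation. `lower_ground_elevations` is a hypothetical name. A node shared by several levees keeps the lowest required elevation. The difference dataset is taken as adjusted minus original.

```python
def lower_ground_elevations(
    elevations: list[float],
    node_pairs: list[tuple[int, int]],
    crests: list[float],
    min_height: float,
) -> tuple[list[float], list[float]]:
    """Lower node elevations so each sits at least min_height below its levee crest.

    elevations : mesh node elevations indexed by node id.
    node_pairs : (node on side 1, node on side 2) for each levee node pair.
    crests     : defined crest elevation for each node pair.
    Returns (adjusted elevation dataset, difference dataset = adjusted - original).
    """
    if min_height <= 0.0:
        raise ValueError("min_height must be positive")
    if len(node_pairs) != len(crests):
        raise ValueError("need one crest elevation per node pair")
    adjusted = list(elevations)
    for (n1, n2), crest in zip(node_pairs, crests):
        limit = crest - min_height
        for node in (n1, n2):
            # Only lower, never raise; a node shared by several levees
            # therefore ends up at the lowest of its required limits.
            if adjusted[node] > limit:
                adjusted[node] = limit
    difference = [new - old for new, old in zip(adjusted, elevations)]
    return adjusted, difference
```

A difference dataset containing only zeros corresponds to the case where no adjustments were needed, in which case the tool creates no datasets.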

If the user chooses to accept the adjustments, the two output datasets will be loaded onto the input domain mesh in SMS. The user may then use the Data → Map Elevation… command to set the adjusted elevation dataset as the Z dataset of the mesh. (Note: if no adjustments were made, no datasets will be created.)

Reviewing Results

After the checks are complete, a plot/results window appears if any adjustments were made. The report window contains a table where each row represents a levee pair. In a pane to the right of the table, a plot is displayed for the currently selected levee. The x-axis is parametric distance along the arc and the y-axis is elevation. Curves are drawn for the levee crest elevation (as defined in the BC coverage) and for the mesh ground elevations on either side of the levee, both before and after adjustment. Adjusted elevations are plotted as solid lines while original elevations are plotted as dashed lines.

The levees shown in the table and drawn in the map viewer can be filtered based on the four possible check statuses. The check status and a short description of the detected issues is displayed in a label on the right side pane.

- Adjusted: Node(s) in multiple levees, gaps in check line or incomplete check line.

- Adjusted without issue: Indicates that the crest elevations for the levee did need adjusting but no other issues were detected.

- Adjusted with issue: Indicates that the crest elevations for the levee did need adjusting and one or more other issues were detected. See the “Validity checks” section for the possible issues that may be detected.

- Unadjusted without issue: Indicates that the crest elevations for the levee did not need adjusting and no other issues were detected.

- Unadjusted with issue: Indicates that the crest elevations for the levee were not adjusted but one or more other issues were detected. See the “Validity checks” section for the possible issues that may be detected.

Clicking the OK button closes both results dialogs and returns the user to the tool runner dialog. There the user can either click OK to accept the adjustments and load the output datasets onto the domain mesh in SMS, or click Cancel to reject the adjustments.

Clicking the Map Viewer button brings the Map Viewer dialog to the front. The Map Viewer is modeless, so it can be hidden behind other windows.

Clicking the Export Log... button prompts the user for a filename and writes the global and levee-specific logs to the selected file.

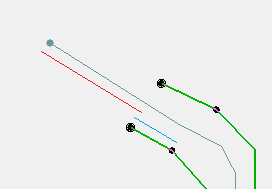

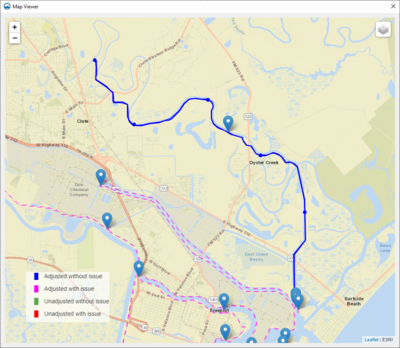

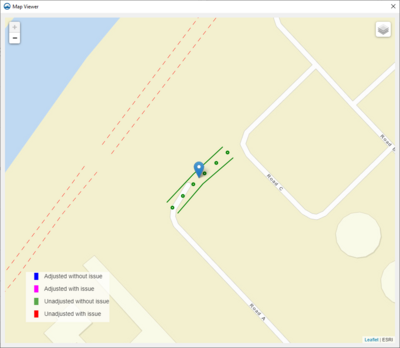

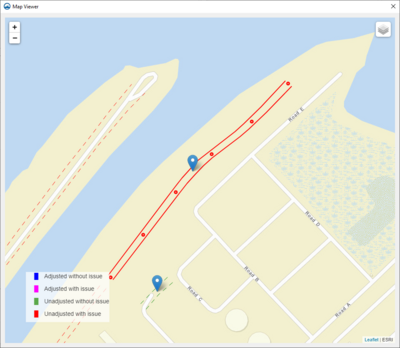

Map Viewer

The Map Viewer contains a map view of all the levee arcs currently being displayed in the levee results table. The currently selected levee pair in the levee results table is drawn with solid lines in the map viewer and all other levees are drawn with dashed lines. Point markers are drawn in the middle of the levee at 20% intervals that match the parametric length ticks on the x-axis of the levee result plot.

A marker in the middle of each levee displays a short description of the levee pair when clicked.

The color of the levee arcs is determined by check status.

- Adjusted without issue: Blue

- Adjusted with issue: Magenta

- Unadjusted without issue: Green

- Unadjusted with issue: Red

The zoom level can be manually adjusted with the control in the top left of the dialog.

The layer control in the top right of the dialog can be used to switch between the “ESRI Street Map” and “ESRI World Imagery” background map layers.

The bottom left corner of the dialog contains a legend for the possible levee check status colors.

Current Location in Toolbox

ADCIRC|Check/Fix Levee Ground Elevations

Related Tools

Coverages Tools

Arcs to Polygons

The Arcs to Polygons tool converts all arcs in a coverage to polygons based on specified parameters.

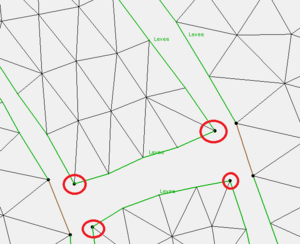

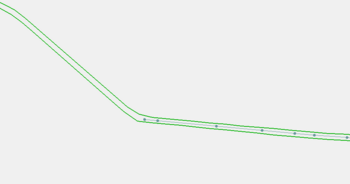

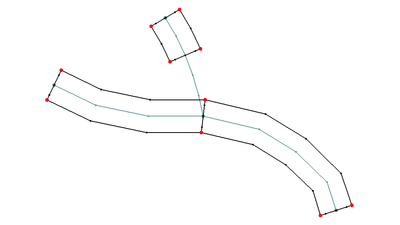

This tool is designed to simplify the creation of linear polygons that represent features such as channels or embankments. The feature extraction operations create stream or ridge networks, which can be used to position cells/elements in a mesh/UGrid so that it honors these features. However, it is often useful to represent such linear features as Patch polygons to allow for anisotropic cells that are elongated in the direction of the feature.

Each arc in the input coverage will be converted to a polygon. The following applies during the creation process:

- The polygon for isolated lines/arcs will consist of offset lines in both directions from the arc. The ends of the polygon will be perpendicular to the end segment of the arc.

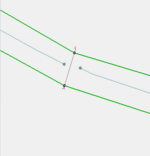

- If two arcs are connected (end to end), the orientation of the two polygons will be averaged so that the polygons share an end.

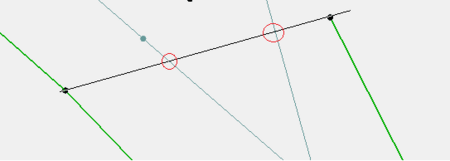

- If three arcs/lines join at a node, the two that have the most similar direction will be maintained as a continuous feature. The third will be trimmed back to not encroach on the polygons of the other two. If more than three arcs/lines join at a single location, the two arcs with the most similar direction will define the preserved direction. All other arcs will be trimmed back to not encroach.

- If a single arc closes in a loop, the resulting polygon closes on itself. This polygon will not function as a patch in SMS. This workflow is not recommended.

- The tool checks whether the polygons from two unconnected arcs overlap or intersect each other. Any overlap is reported in the progress dialog.
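The offset rules above can be approximated in a short Python sketch: perpendicular ends for isolated arcs, and an averaged orientation where two arcs meet end to end. The names are hypothetical. The sketch is a simplification: interior vertices are offset along the perpendicular of the averaged segment direction without miter-length correction, and junction trimming for three or more arcs is not handled.

```python
import math

Point = tuple[float, float]


def _unit(dx: float, dy: float) -> tuple[float, float]:
    """Normalize a direction vector."""
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("zero-length direction")
    return dx / length, dy / length


def arc_to_polygon(arc: list[Point], half_width: float) -> list[Point]:
    """Offset an open arc by half_width on both sides and return the ring.

    End vertices are offset perpendicular to their end segment; interior
    vertices use the perpendicular of the averaged direction of the two
    adjacent segments.
    """
    if len(arc) < 2:
        raise ValueError("arc needs at least two points")
    seg_dirs = [_unit(b[0] - a[0], b[1] - a[1]) for a, b in zip(arc, arc[1:])]
    left, right = [], []
    for i, (x, y) in enumerate(arc):
        if i == 0:
            dx, dy = seg_dirs[0]
        elif i == len(arc) - 1:
            dx, dy = seg_dirs[-1]
        else:
            a, b = seg_dirs[i - 1], seg_dirs[i]
            dx, dy = _unit(a[0] + b[0], a[1] + b[1])  # averaged direction
        nx, ny = -dy, dx  # left-hand normal
        left.append((x + nx * half_width, y + ny * half_width))
        right.append((x - nx * half_width, y - ny * half_width))
    return left + right[::-1]  # walk out on the left, back on the right


def junction_end(arc_a: list[Point], arc_b: list[Point], half_width: float) -> tuple[Point, Point]:
    """Shared polygon end where arc_a's last point meets arc_b's first point."""
    if arc_a[-1] != arc_b[0]:
        raise ValueError("arcs must connect end to start")
    da = _unit(arc_a[-1][0] - arc_a[-2][0], arc_a[-1][1] - arc_a[-2][1])
    db = _unit(arc_b[1][0] - arc_b[0][0], arc_b[1][1] - arc_b[0][1])
    dx, dy = _unit(da[0] + db[0], da[1] + db[1])  # average of the two arcs
    x, y = arc_a[-1]
    return (x - dy * half_width, y + dx * half_width), (x + dy * half_width, y - dx * half_width)
```

Because both polygons use the same averaged direction at the shared node, their ends coincide. This is what makes the two polygons form a continuous channel.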

Input Parameters

- Input coverage – This can be any map coverage in the project. The arcs in the coverage will be used to guide polygon creation.

- Average element/cell width – This defines the average width of the segments projecting perpendicular from the arc. The units (foot/meter) correspond to the display projection of SMS.

- Number of elements/cells (must be even) – This defines the total number of segments across the feature. Half of the segments are projected on each side of the centerline, so the value must be even.

- Bias (0.01-100.0) – This controls the relative length of the segments across the feature. It is actually a double bias, because each side of the feature is biased from its outer edge toward the center. A bias less than 1.0 results in segments that are shorter at the center, and a bias greater than 1.0 results in segments that are longer at the center. Specify a small bias to improve the representation of the channel bottom or embankment crest, and a large bias to increase the resolution of the outer edges (shoulders or toes) of the feature. See images below for examples.
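The relationship between average width, segment count, and bias can be sketched in Python. Consistent with Examples 2 through 4 below, the sketch assumes that the bias is the ratio of center segment length to outer segment length on each side. It also assumes that the total width equals the number of segments times the average width. The geometric growth between intermediate segments is an additional assumption not stated for the tool. `cross_section_lengths` is a hypothetical name.

```python
def cross_section_lengths(avg_width: float, num_segments: int, bias: float) -> list[float]:
    """Segment lengths across the feature, listed from one outer edge to the other.

    Assumptions: each half has num_segments // 2 segments, the center/outer
    length ratio within a half equals `bias`, lengths grow geometrically from
    the outer edge toward the centerline, and the total width is
    num_segments * avg_width.
    """
    if num_segments < 2 or num_segments % 2:
        raise ValueError("num_segments must be a positive even number")
    if not 0.01 <= bias <= 100.0:
        raise ValueError("bias must be between 0.01 and 100.0")
    k = num_segments // 2  # segments on each side of the centerline
    if k == 1:
        half = [avg_width]  # a single segment per side: bias has no effect
    else:
        ratio = bias ** (1.0 / (k - 1))  # growth factor between neighbors
        raw = [ratio ** i for i in range(k)]  # outer edge -> centerline
        scale = k * avg_width / sum(raw)  # each half spans k * avg_width
        half = [scale * r for r in raw]
    # The second half mirrors the first: centerline -> outer edge.
    return half + half[::-1]
```

For Example 2 (four segments, bias 2.0, average width w), this gives outer segments of 2w/3 and center segments of 4w/3, for a total width of 4.0 w.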

Output Parameters

- Output coverage – Specify the name of the coverage to be created to contain the generated polygons. This coverage is intended to be incorporated into a mesh generation coverage for the domain. The resulting coverage is an Area Property coverage and will need to be changed to the desired coverage type.

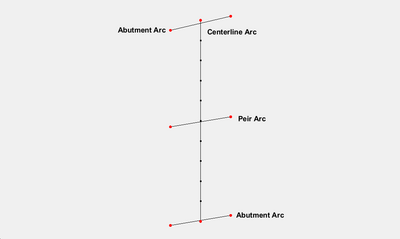

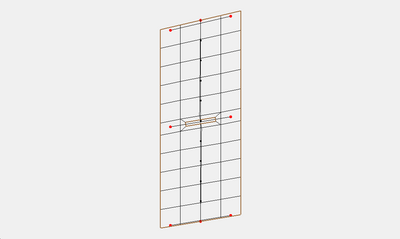

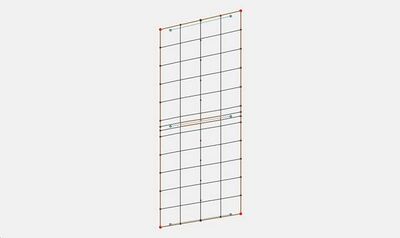

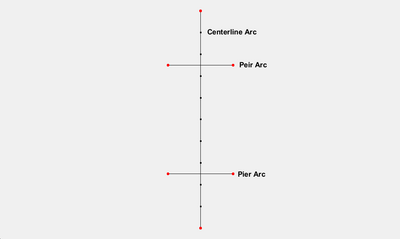

Current Location in Toolbox

Coverages/Arcs to Polygons

Examples

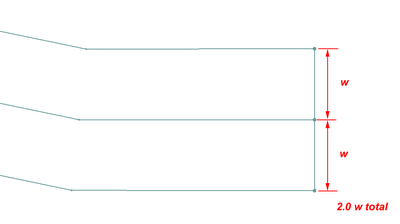

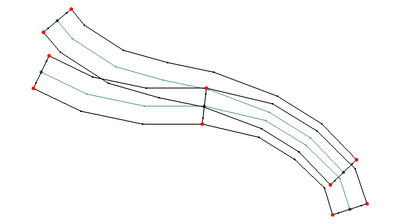

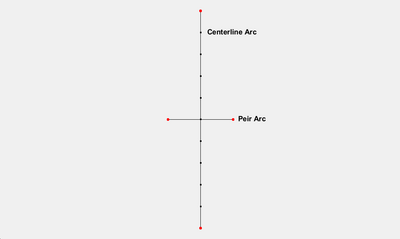

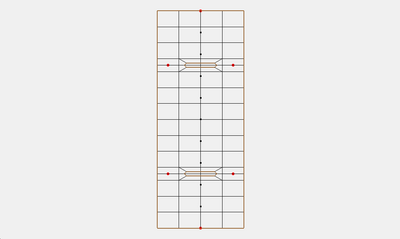

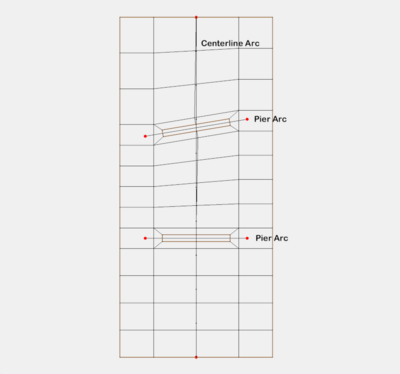

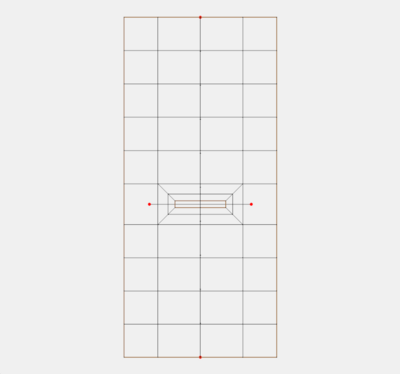

Example 1 – 2 segment wide channel

In this case the average element/cell width was set to "w". Since each half has one cell, both segments are "w". The total width is therefore "2.0 w". Since there are only 2 segments, the bias value has no impact in this case.

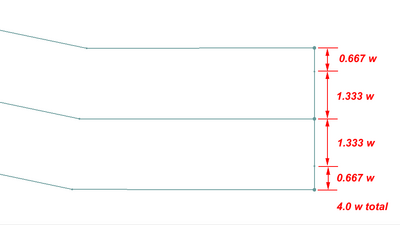

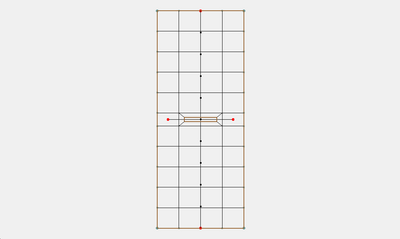

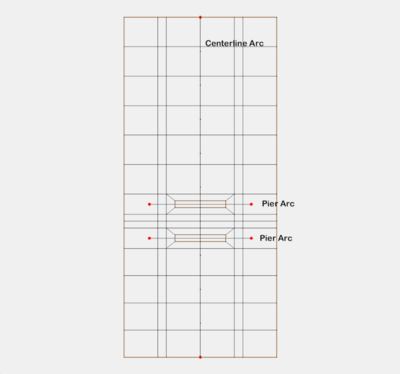

Example 2 – 4 segment wide channel with bias greater than 1.0

In this case with an average segment length (element/cell width) of "w" the total width is "4.0 w". Since the bias is 2.0, the center segments are twice as long as the outer segments.

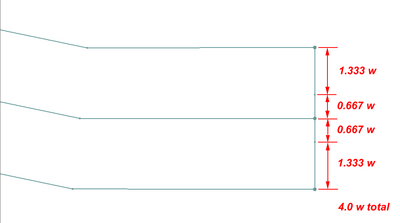

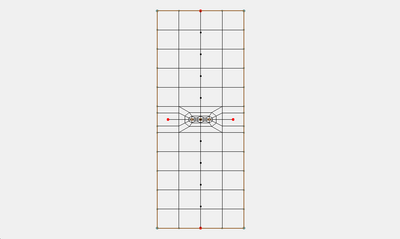

Example 3 – 4 segment wide channel with bias less than 1.0

In this case with an average segment length (element/cell width) of "w" the total width is "4.0 w". Since the bias is 0.5, the center segments are half as long as the outer segments.

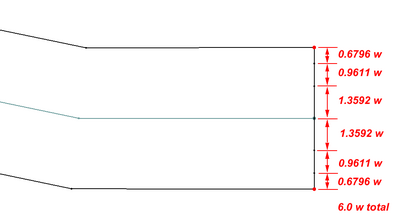

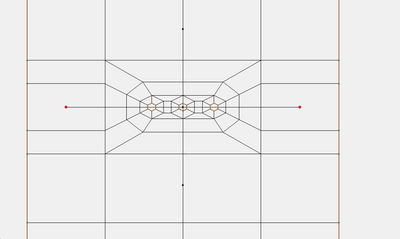

Example 4 – 6 segment wide channel with bias greater than 1.0

In this case with an average segment length (element/cell width) of "w" the total width is "6.0 w". Since the bias is 2.0, the center segments are twice as long as the outer segments.

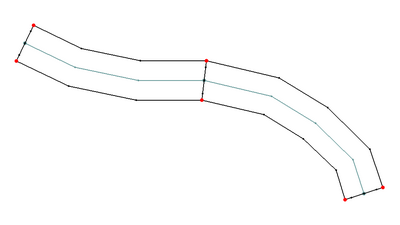

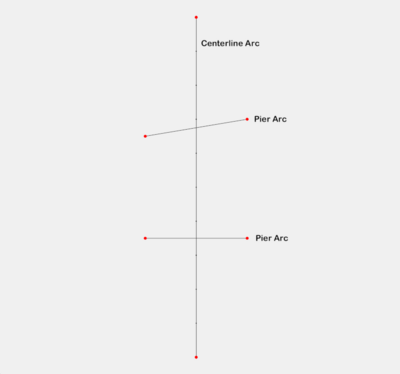

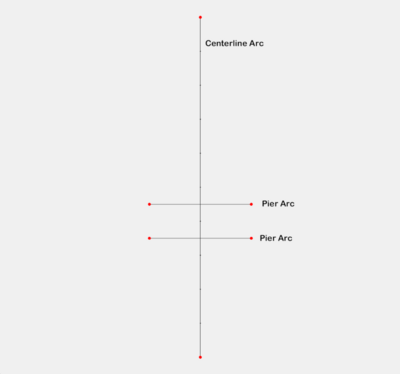
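The examples above are consistent with a double geometric progression: each half of the feature holds half of the segments, segment lengths change by a constant ratio from the outer edge to the center, and the bias is the ratio of the center segment length to the outer segment length. The Python sketch below assumes that interpretation and an even segment count; it is not the tool's source, and the function name `channel_segment_lengths` is hypothetical.

```python
from typing import List


def channel_segment_lengths(num_segments: int, avg_width: float, bias: float) -> List[float]:
    """Hypothetical sketch of double-biased segment lengths across a feature.

    Assumes an even segment count, a total width of num_segments * avg_width,
    and a geometric progression in each half where bias = center / outer length.
    """
    if num_segments < 2 or num_segments % 2 != 0:
        raise ValueError("expected an even number of segments (2 or more)")
    per_half = num_segments // 2
    half_width = num_segments * avg_width / 2.0
    # Constant growth ratio between neighboring segments within one half.
    ratio = bias ** (1.0 / (per_half - 1)) if per_half > 1 else 1.0
    weights = [ratio ** i for i in range(per_half)]  # outer edge -> center
    scale = half_width / sum(weights)
    half = [scale * w for w in weights]
    # Mirror the half so both sides are biased from the outer edges to the center.
    return half + half[::-1]


# Example 2 above: 4 segments, bias 2.0 (lengths in units of "w").
print([round(s, 3) for s in channel_segment_lengths(4, 1.0, 2.0)])  # [0.667, 1.333, 1.333, 0.667]
```

With only two segments (Example 1) each half has a single segment, so the ratio is irrelevant and both lengths equal "w", matching the statement that the bias has no impact in that case.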

Example 5 – End to End Arcs to Polygons

The offset at the junction of the two arcs is adjusted to be perpendicular to the average of the two arcs. The two polygons would create a continuous channel.

Example 6 – Tributary Arcs to Polygons

At the junction of more than two arcs, the two that are closest to linear are assumed to be the main channel and the polygons for those two are treated just like example 5. The other arcs connected to this junction are treated as a tributary. The arc is still converted to a polygon, but any vertices on the arc within two widths of the junction are ignored to allow room for a transition between the channels to occur. (Note: it is anticipated that in the future this will be modified to allow for T or Y type merging of the polygons for junctions of three arcs.)

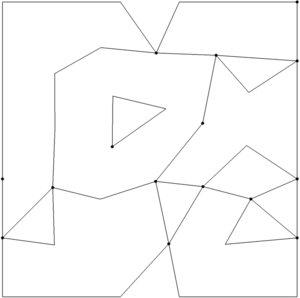

Example 7 – Parallel Arcs that Result in Overlapping Channel Polygons

If two arcs being converted to polygons result in overlapping polygons, the tool reports this issue using one of the overlapping arc indices. It is the responsibility of the modeler to adjust the arcs and convert to non-overlapping polygons, or clean up the overlapping polygons manually.

Related Tools

Trim Coverage

The Trim Coverage tool removes unwanted features from a coverage based on their location. The tool trims all arcs in a selected coverage to the polygons of another selected coverage. Arcs can be trimmed to preserve the portions of the arcs inside or outside of the trimming polygons. The user also specifies a buffer distance so that the trimmed arcs do not end exactly at their intersection points with the polygons.

One specific application of this tool is trimming extracted features that lie outside of the desired simulation domain. Another application is clearing out feature arcs that are inside the extents of a predominant feature such as a main river channel.

The tool allows for trimming data to features that are either inside or outside of the desired polygons.

For "large" polygons (> 2500 points), the polygon point locations will be smoothed prior to computing the polygon buffer. This is done because there are cases where the buffer distance combined with the polygon segment orientations causes the buffer operation to be very slow. Smoothing the locations prevents this slowdown.

Input Parameters

- Input coverage containing arcs to be trimmed – Select a coverage from the dropdown list. The arcs on this coverage will be trimmed.

- Input coverage containing polygons to trim by – Select a coverage from the dropdown list. The polygons on this coverage will be used to trim the arcs on the target coverage.

- Trimming option – Trim to inside or Trim to outside.

- "Trim to inside" – Trim to inside only keeps portions of the arcs that are inside of the polygons.

- "Trim to outside" – Trim to outside only keeps the arc portions that are outside of the polygons.

- Trimming buffer distance – Enter a value that defines an offset (either inside if trimming to inside, or outside if trimming to outside) of the trimming polygons. This can be used to keep the results from being too close to the trimming polygon(s).

Output Parameters

- Output coverage – specify the name of the coverage to be created (representing the trimmed arcs).
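The core trimming step can be sketched as splitting each arc segment wherever it crosses a polygon edge and keeping the sub-segments whose midpoints fall inside (or outside) the polygon. This Python sketch is an illustration only: it handles a single polygon, assumes a buffer distance of 0, skips the smoothing of large polygons, and ignores arc segments that run exactly along a polygon edge. All function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, poly: List[Point]) -> bool:
    """Even-odd (ray casting) test; points exactly on an edge are not handled specially."""
    x, y = p
    inside = False
    for i in range(len(poly)):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % len(poly)]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def crossing_params(a: Point, b: Point, poly: List[Point]) -> List[float]:
    """Parameters t in (0, 1) where segment a-b crosses a polygon edge."""
    ts = []
    dx, dy = b[0] - a[0], b[1] - a[1]
    for i in range(len(poly)):
        c, d = poly[i], poly[(i + 1) % len(poly)]
        ex, ey = d[0] - c[0], d[1] - c[1]
        denom = dx * ey - dy * ex
        if denom == 0:
            continue  # parallel edges are skipped in this sketch
        t = ((c[0] - a[0]) * ey - (c[1] - a[1]) * ex) / denom
        u = ((c[0] - a[0]) * dy - (c[1] - a[1]) * dx) / denom
        if 0 < t < 1 and 0 <= u <= 1:
            ts.append(t)
    return ts


def trim_arc(arc: List[Point], poly: List[Point], keep_inside: bool = True) -> List[List[Point]]:
    """Split an arc at polygon crossings and keep the inside (or outside) pieces."""
    pieces: List[List[Point]] = []
    current: List[Point] = []

    def lerp(a: Point, b: Point, t: float) -> Point:
        return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))

    for a, b in zip(arc, arc[1:]):
        ts = sorted({0.0, 1.0, *crossing_params(a, b, poly)})
        for t0, t1 in zip(ts, ts[1:]):
            mid = lerp(a, b, (t0 + t1) / 2.0)
            if point_in_polygon(mid, poly) == keep_inside:
                if not current:
                    current.append(lerp(a, b, t0))
                current.append(lerp(a, b, t1))
            elif current:
                pieces.append(current)
                current = []
    if current:
        pieces.append(current)
    return pieces
```

A nonzero buffer distance and multiple trimming polygons are easier to handle with a geometry library such as Shapely (its `buffer`, `intersection`, and `difference` methods), which this sketch deliberately avoids.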

Current Location in Toolbox

Coverages/Trim Coverage

Related Tools

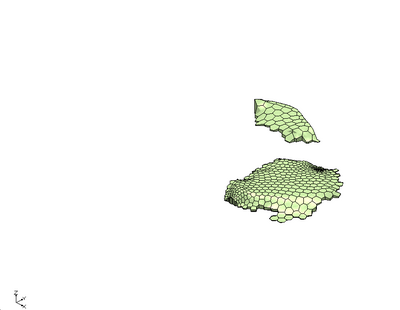

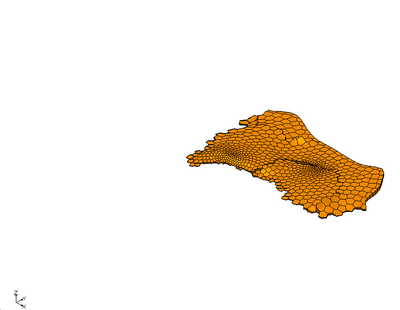

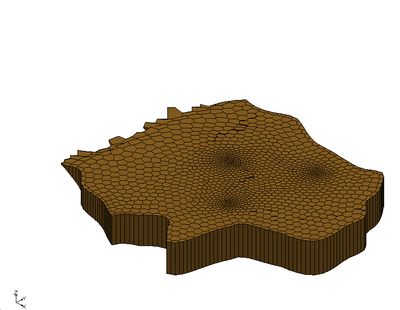

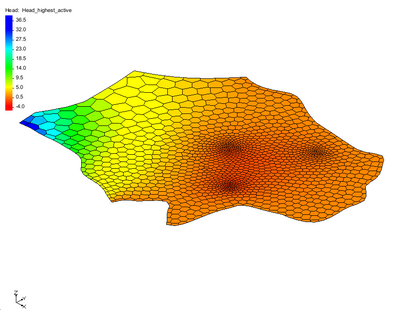

UGrid Boundary to Polygons

The UGrid Boundary to Polygons tool converts the outer boundary of a UGrid to polygons in a new coverage. This tool only functions on UGrids with 2D Cells.

Input parameters

- Input grid – The UGrid (or 2D mesh/scatter) whose boundary will be converted to arcs in a coverage.

Output parameters

- Output coverage name – Name of the coverage created by the tool. If this is left blank then the name of the coverage will be set to the name of the input grid.
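One common way to find the boundary of a UGrid made of 2D cells is to collect the edges that belong to exactly one cell and chain them into closed loops, each of which can become a polygon. The Python sketch below assumes each cell is a list of node indices in order around the cell and that no boundary node is a pinch point (touching more than two boundary edges); the function names are hypothetical and this is not the tool's source. Interior holes, if present, appear as additional loops.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple

Edge = Tuple[int, int]


def boundary_edges(cells: Sequence[Sequence[int]]) -> List[Edge]:
    """Edges used by exactly one 2D cell lie on a boundary."""
    counts: Counter = Counter()
    for cell in cells:
        for i in range(len(cell)):
            a, b = cell[i], cell[(i + 1) % len(cell)]
            counts[(min(a, b), max(a, b))] += 1
    return [edge for edge, n in counts.items() if n == 1]


def boundary_loops(cells: Sequence[Sequence[int]]) -> List[List[int]]:
    """Chain boundary edges into closed loops of node indices.

    Assumes every boundary node touches exactly two boundary edges (no pinch points).
    """
    neighbors: Dict[int, List[int]] = defaultdict(list)
    for a, b in boundary_edges(cells):
        neighbors[a].append(b)
        neighbors[b].append(a)
    unvisited = set(neighbors)
    loops: List[List[int]] = []
    while unvisited:
        start = min(unvisited)
        loop = [start]
        unvisited.discard(start)
        prev, current = None, start
        while True:
            # Step to the neighbor we did not just come from.
            nxt = next(n for n in neighbors[current] if n != prev)
            if nxt == start:
                break
            loop.append(nxt)
            unvisited.discard(nxt)
            prev, current = current, nxt
        loops.append(loop)
    return loops


# Two triangles sharing the diagonal 0-2 form a square with one boundary loop.
print(boundary_loops([[0, 1, 2], [0, 2, 3]]))
```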

Current location in Toolbox

Coverages/UGrid Boundary to Polygons

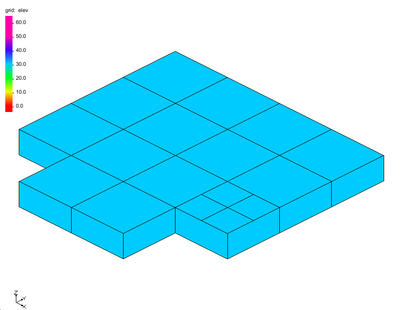

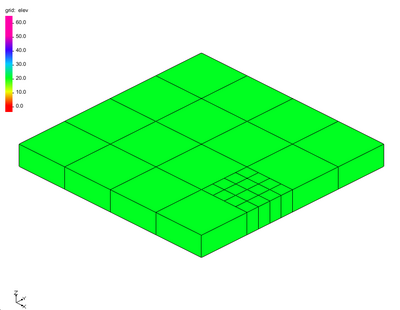

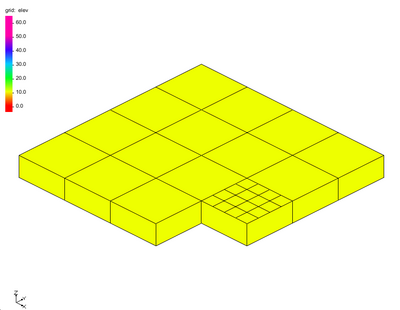

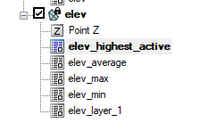

Example

Dataset Tools

Advective Courant Number

The Courant number is a spatially varied (dataset) dimensionless value representing the ratio of the time step to the time a particle takes to pass through a cell of a mesh/grid. This is based on the size of the element and the speed of that particle. A Courant number of 1.0 implies that a particle (parcel or drop of water) would take one time step to flow through the element. Since cells/elements are not guaranteed to align with the flow field, this number is an approximation. This dataset is computed at nodes, so it uses the average size of the cells/elements attached to the node. (In the future, a cell-based tool could compute the Courant number for each cell, but this would still be an approximate number based on the flow direction.)

The advective courant number makes use of a velocity dataset that represents the velocity magnitude field on the desired geometry. The tool computes the Courant number at each node in the selected geometry based on the specified time step.

If the input velocity magnitude dataset is transient, the resulting dataset will also be transient.

For numerical solvers that are Courant limited/controlled, any violation of the Courant condition (where the Courant number exceeds the allowable threshold) could result in instability. Therefore, the maximum of the Courant number dataset gives an indication of the stability of this mesh for the specified time step parameter.

This tool is intended to assist with numerical engine stability, and possibly the selection of an appropriate time step size.
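The nodal calculation described above can be sketched as Courant = velocity × time step / nodal spacing. This Python sketch assumes projected (not geographic) coordinates and takes the nodal spacing as the average length of the edges connected to each node, as described for the Gravity Waves Courant Number tool; the function names are hypothetical and this is not the tool's source. For a transient velocity dataset, the same calculation would be repeated for each time step.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def nodal_spacing(coords: Sequence[Point], edges: Sequence[Tuple[int, int]]) -> List[float]:
    """Average length of the edges connected to each node (projected coordinates assumed)."""
    totals = [0.0] * len(coords)
    counts = [0] * len(coords)
    for i, j in edges:
        length = math.dist(coords[i], coords[j])
        for node in (i, j):
            totals[node] += length
            counts[node] += 1
    # Nodes with no edges get NaN rather than raising a division error.
    return [t / c if c else math.nan for t, c in zip(totals, counts)]


def advective_courant(speeds: Sequence[float], coords: Sequence[Point],
                      edges: Sequence[Tuple[int, int]], time_step: float) -> List[float]:
    """Courant number per node: velocity magnitude * time step / nodal spacing."""
    spacing = nodal_spacing(coords, edges)
    return [v * time_step / dx for v, dx in zip(speeds, spacing)]


coords = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
edges = [(0, 1), (1, 2), (2, 0)]
print(max(advective_courant([1.0, 2.0, 1.0], coords, edges, time_step=5.0)))
```

The maximum of the resulting values is the number to compare against the solver's Courant threshold.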

Input Parameters

- Input dataset – Specify which velocity dataset will be used to represent particle velocity magnitude.

- Use timestep – Enter the computational time step value.

Output Parameters

- Advective courant number dataset – Enter the name for the new dataset which will represent the Courant number. (Suggestion: specify a name that references the input. Typically this would include the time step used in the calculation. The velocity dataset used could be referenced. The geometry is not necessary because the dataset resides on that geometry.)

Current Location in Toolbox

Datasets/Advective Courant Number

Related Tools

Advective Time Step

The time step tool is intended to assist in the selection of a time step for a numerical simulation that is based on the Courant number calculation. This tool can be thought of as the inverse of the Advective Courant Number tool. Refer to the documentation of the Advective Courant Number tool for clarification. The objective of this tool is to compute the time step that would result in the specified Courant number for the given mesh and velocity field. Because each value is the largest time step that satisfies the specified Courant number at that node, the user would then select a time step for analysis that is no larger than the minimum value in the resulting time step dataset. The tool computes the time step at each node in the selected geometry.

If the input velocity dataset is transient, the time step tool will create a transient dataset.

Typically, the Courant number specified for this computation is <= 1.0 for Courant limited solvers. Some solvers maintain stability for Courant numbers up to 2 or some solver specific threshold. Specifying a Courant number below the maximum threshold can increase stability since the computation is approximate.
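Inverting the Courant relation gives a per-node time step of Courant × nodal spacing / velocity. The Python sketch below assumes the nodal spacing has already been computed (for example, as the average length of connected edges) and treats nodes with zero velocity as non-limiting; the function name is hypothetical. The smallest value governs stability for the whole mesh.

```python
import math
from typing import List, Sequence


def advective_time_step(speeds: Sequence[float], spacing: Sequence[float],
                        courant: float = 1.0) -> List[float]:
    """Per-node time step that yields the target Courant number: dt = Cr * dx / v."""
    steps = []
    for v, dx in zip(speeds, spacing):
        # A node with no flow never limits the time step.
        steps.append(courant * dx / v if v > 0 else math.inf)
    return steps


steps = advective_time_step([0.5, 2.0, 0.0], [10.0, 10.0, 10.0], courant=0.9)
governing_step = min(steps)  # the most restrictive node controls stability
print(governing_step)  # 4.5
```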

Input Parameters

- Input dataset – Specify which velocity dataset will be used to represent particle velocity magnitude.

- Use courant number – Enter the threshold Courant value (or a number lower than the threshold for additional stability).

Output Parameters

- Advective time step dataset – Enter the name for the new dataset which will represent the maximum time step. (Suggestion: specify a name that references the input. Typically this would include the Courant number used in the calculation. The velocity dataset used could be referenced. The geometry is not necessary because the dataset resides on that geometry.)

Current Location in Toolbox

Datasets/Advective Time Step

Related Tools

Angle Convention

This tool creates a new scalar dataset that represents the direction component of a vector quantity from an existing representation of that vector direction. The tool converts from the existing angle convention to another angle convention.

Definitions:

- The meteorological direction is defined as the direction FROM. The origin (0.0) indicates the direction is coming from North. It increases clockwise from North (viewed from above). This is most commonly used for wind direction.

- The oceanographic direction is defined as the direction TO. The origin (0.0) indicates the direction is going to the North. It increases clockwise (like a bearing) so 45 degrees indicates a direction heading towards the North East.

- The Cartesian direction is defined by the Cartesian coordinate axes as a direction TO. East, or the positive X axis, defines the zero direction. It increases in a counterclockwise direction (right-hand rule). 45 degrees indicates a direction heading to the North East and 90 degrees indicates a direction heading to the North.

The tool has the following options:

Input Parameters

- Input scalar dataset – Select the scalar dataset that will be the input.

- Input angle convention – Select the type of angle convention used in the input scalar dataset.

- "Cartesian" – Specifies that the input dataset uses a Cartesian angle convention.

- "Meteorologic" – Specifies that the input dataset uses a Meteorological angle convention.

- "Oceanographic" – Specifies that the input dataset uses an Oceanographic angle convention.

Output Parameters

- Output angle convention – Select the angle convention for the new dataset.

- "Cartesian" – Sets that the output dataset will use a Cartesian angle convention.

- "Meteorologic" – Sets that the output dataset will use a Meteorological angle convention.

- "Oceanographic" – Sets that the output dataset will use an Oceanographic angle convention.

- Output dataset name – Enter the name for the new dataset.
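From the definitions above, each convention can be mapped to and from the oceanographic convention with a simple formula: meteorological and oceanographic differ by 180 degrees (FROM versus TO), and Cartesian relates to oceanographic by 90 minus the angle. Both mappings are their own inverse, so one table serves both directions. This Python sketch is an illustration of that arithmetic, not the tool's source; the dictionary keys reuse the dialog labels purely for readability.

```python
from typing import Callable, Dict

# Each function converts TO the oceanographic convention and is also its own inverse.
_VIA_OCEANOGRAPHIC: Dict[str, Callable[[float], float]] = {
    "Oceanographic": lambda a: a % 360.0,
    "Meteorologic": lambda a: (a + 180.0) % 360.0,  # FROM <-> TO: reverse the direction
    "Cartesian": lambda a: (90.0 - a) % 360.0,      # CCW from East <-> clockwise from North
}


def convert_angle(angle: float, source: str, target: str) -> float:
    """Convert an angle in degrees between Cartesian, meteorological, and oceanographic conventions."""
    oceanographic = _VIA_OCEANOGRAPHIC[source](angle)
    return _VIA_OCEANOGRAPHIC[target](oceanographic)


# Wind coming FROM the west (270 meteorological) heads TO the east (0 Cartesian).
print(convert_angle(270.0, "Meteorologic", "Cartesian"))  # 0.0
```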

Canopy Coefficient

The Canopy Coefficient tool computes a canopy coefficient dataset, consisting of a canopy coefficient for each node in the target grid, from a landuse raster. The canopy coefficient dataset can also be thought of as an activity mask. The canopy coefficient terminology comes from the ADCIRC fort.13 nodal attribute of the same name, which allows the user to disable wind stress for nodes directly under heavily forested areas that have been flooded, like a swamp. In essence, the canopy shields portions of the mesh from the effect of wind. Grid nodes in the target grid that lie outside of the extents of the specified landuse raster are assigned a canopy coefficient of 0 (unprotected).

The tool is built to specifically support NLCD and C-CAP rasters, but can be applied with custom rasters as well. Tables containing the default parameter values for these raster types are included below.

Input Parameters

- Input landuse raster – This is a required input parameter. Specify which raster in the project to use when determining the canopy effect.

- Interpolation option – Select which of the following options will be used for interpolating the canopy coefficient.

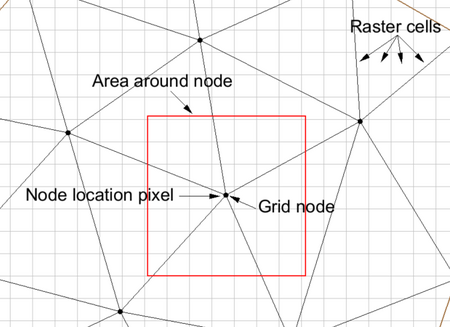

- "Node location" – For this option, the tool finds the landcover raster cell that contains each node in the grid and uses that single cell/pixel to determine the canopy coefficient. The canopy coefficient is set to 1 (active) if the landuse/vegetative classification associated with a pixel type is set to 1 (unprotected) and the coefficient is set to 0 (inactive) if the pixel is of a type that is protected.

- "Area around node" – When this option is selected, an input field for minimum percent blocked is enabled (described below). For this option, the tool computes the average length of the edges connected to the node being classified. The tool then counts the pixels in the area around the node that are protected and those that are exposed. The node is marked as exposed or protected based on the minimum percent blocked parameter described below. For example, if a node is found to lie in pixel (100,200) of the raster, and the average length of the edges connected to the node is 5 pixels (computed based on the average length of the edges and the pixel size of the raster), then all pixels from (95,195) through (105,205) (121 pixels in all) are reviewed. If more than the minimum percent blocked of those pixels are classified as protected, the node is classified as protected.

- Minimum percent blocked which ignores wind stress – Sets the minimum percentage of protected pixels in the area around a node that is required for the node to be classified as protected (wind stress ignored).

- Target grid – This is a required input parameter. Specify which grid/mesh the canopy coefficient dataset will be created for.

- Landuse raster type – This is a required parameter. Specify what type of landuse raster to use.

- "NLCD" – Sets the landuse raster type to National Land Cover Dataset (NLCD). A mapping table file for NLCD can be found here and down below.

- "C-CAP" – Sets the landuse raster type to Coastal Change Analysis Program (C-CAP). A mapping table file for C-CAP can be found here and down below.

- "Other" – Sets the landuse raster type to be set by the user. This selection adds an option to the dialog to select a mapping table (csv file).

- Landuse to canopy coefficient mapping table – The Select File... button will allow a table file to be selected. The entire file name will be displayed in the text box to its right.

Output Parameters

- Output canopy coefficient dataset – Enter the name for the new canopy coefficient dataset.
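The "Area around node" classification can be sketched as counting protected pixels in a square window centered on the node's pixel and comparing the percentage to the minimum percent blocked. This Python sketch assumes an inclusive window of half-width `half_width` pixels (as in the (95,195) through (105,205) example), skips pixels outside the raster, and treats a node with no raster coverage as unprotected. It returns a protected/unprotected flag rather than a coefficient value, and the set of protected classes is passed in; the NLCD forest classes used below are for illustration only. The function name is hypothetical.

```python
from typing import Sequence, Set


def node_is_protected(raster: Sequence[Sequence[int]], row: int, col: int, half_width: int,
                      protected_codes: Set[int], min_percent_blocked: float) -> bool:
    """Classify a node from the share of protected pixels in a square window around it."""
    protected = total = 0
    for r in range(row - half_width, row + half_width + 1):
        for c in range(col - half_width, col + half_width + 1):
            if 0 <= r < len(raster) and 0 <= c < len(raster[r]):
                total += 1
                if raster[r][c] in protected_codes:
                    protected += 1
    if total == 0:
        return False  # outside the raster: treated as unprotected
    return 100.0 * protected / total > min_percent_blocked


raster = [[41, 41, 11],
          [41, 42, 11],
          [11, 11, 43]]
forest = {41, 42, 43}  # illustrative protected classes (NLCD forest codes)
print(node_is_protected(raster, 1, 1, 1, forest, 50.0))  # 5 of 9 pixels protected
```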

Canopy Coefficient NLCD Mapping Table

| Code | Description | Canopy |
|---|---|---|
| 0 | Background | 0 |
| 1 | Unclassified | 0 |
| 11 | Open Water | 0 |
| 12 | Perennial Ice/Snow | 0 |
| 21 | Developed Open Space | 0 |
| 22 | Developed Low Intensity | 0 |
| 23 | Developed Medium Intensity | 0 |
| 24 | Developed High Intensity | 0 |
| 31 | Barren Land (Rock/Sand/Clay) | 0 |
| 41 | Deciduous Forest | 1 |
| 42 | Evergreen Forest | 1 |
| 43 | Mixed Forest | 1 |
| 51 | Dwarf Scrub | 0 |
| 52 | Shrub/Scrub | 0 |
| 71 | Grassland/Herbaceous | 0 |
| 72 | Sedge/Herbaceous | 0 |
| 73 | Lichens | 0 |
| 74 | Moss | 0 |
| 81 | Pasture/Hay | 0 |
| 82 | Cultivated Crops | 0 |
| 90 | Woody Wetlands | 1 |
| 95 | Emergent Herbaceous Wetlands | 0 |
| 91 | Palustrine Forested Wetland | 1 |
| 92 | Palustrine Scrub/Shrub Wetland | 1 |
| 93 | Estuarine Forested Wetland | 1 |
| 94 | Estuarine Scrub/Shrub Wetland | 0 |
| 96 | Palustrine Emergent Wetland (Persistent) | 0 |
| 97 | Estuarine Emergent Wetland | 0 |
| 98 | Palustrine Aquatic Bed | 0 |
| 99 | Estuarine Aquatic Bed | 0 |

Canopy Coefficient CCAP Mapping Table

| Code | Description | Canopy |
|---|---|---|
| 0 | Background | 0 |
| 1 | Unclassified | 0 |
| 2 | Developed High Intensity | 0 |
| 3 | Developed Medium Intensity | 0 |
| 4 | Developed Low Intensity | 0 |
| 5 | Developed Open Space | 0 |
| 6 | Cultivated Crops | 0 |
| 7 | Pasture/Hay | 0 |
| 8 | Grassland/Herbaceous | 0 |
| 9 | Deciduous Forest | 1 |
| 10 | Evergreen Forest | 1 |
| 11 | Mixed Forest | 1 |
| 12 | Scrub/Shrub | 0 |
| 13 | Palustrine Forested Wetland | 1 |
| 14 | Palustrine Scrub/Shrub Wetland | 1 |
| 15 | Palustrine Emergent Wetland (Persistent) | 0 |
| 16 | Estuarine Forested Wetland | 1 |
| 17 | Estuarine Scrub/Shrub Wetland | 0 |
| 18 | Estuarine Emergent Wetland | 0 |
| 19 | Unconsolidated Shore | 0 |
| 20 | Barren Land | 0 |
| 21 | Open Water | 0 |
| 22 | Palustrine Aquatic Bed | 0 |
| 23 | Estuarine Aquatic Bed | 0 |
| 24 | Perennial Ice/Snow | 0 |
| 25 | Tundra | 0 |

Current Location in Toolbox

Datasets/Canopy Coefficient

Related Tools

Chezy Friction

The Chezy Friction tool creates a new scalar dataset that represents the spatially varying Chezy friction coefficient at the sea floor. The tool is built to specifically support NLCD and C-CAP rasters with built in mapping values, but can be applied with custom rasters or custom mapping as well. Tables containing the default parameter values for these raster types are included below.

The tool combines values from the pixels of the raster object specified as a parameter for the tool. For each node in the geometry, the "area of influence" is computed for the node. The area of influence is a square with the node at the centroid of the square. The size of the square is the average length of the edges connected to the node in the target grid. All of the raster values within the area of influence are extracted from the specified raster object. A composite Chezy friction value is computed by taking a weighted average of all the pixel values. If a node lies outside of the extents of the specified raster object, the default value is used as the Chezy friction coefficient at the node.

Input Parameters

- Input landuse raster – This is a required input parameter. Specify which raster in the project to use when determining the Chezy friction coefficients.

- Landuse raster type – This is a required parameter. Specify what type of landuse raster to use.

- "NLCD" – Sets the landuse raster type to National Land Cover Dataset (NLCD). A mapping table file for NLCD can be found here and down below.

- "C-CAP" – Sets the landuse raster type to Coastal Change Analysis Program (C-CAP). A mapping table file for C-CAP can be found here and down below.

- "Other" – Sets the landuse raster type to be set by the user. This selection adds an option to the dialog to select a mapping table (csv file).

- Landuse to Chezy friction mapping table – The Select File... button will allow a table file to be selected. The entire file name will be displayed in the text box to its right.

- Target grid – This is a required input parameter. Specify which grid/mesh the Chezy friction dataset will be created for.

- Default Chezy friction option – Set the default value to use for the Chezy friction coefficient for nodes not lying inside the specified raster object. This can be set to "Constant" to use a constant value or set "Dataset" to select a dataset to use.

- Default Chezy friction value – Enter the constant value to use as a default value.

- Default Chezy friction dataset – Select a dataset to use as a default value.

- Subset mask dataset – This optional parameter allows using a dataset as a subset mask. Nodes not marked as active in this dataset are assigned the default value.

Output Parameters

- Output Chezy friction dataset – Enter the name for the new Chezy friction dataset.
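The area-of-influence calculation described above can be sketched as mapping every pixel in a square window around the node through the landuse table and averaging the results. The weighting used by the tool is not specified here, so this Python sketch assumes equal weights (a plain mean); it also assumes the window side is given in pixels, maps unknown codes to the default, and falls back to the default for nodes outside the raster. The function name and the partial mapping dictionary (values copied from the NLCD table below) are illustrative.

```python
from typing import Dict, Sequence


def composite_chezy(raster: Sequence[Sequence[int]], row: int, col: int, side_pixels: int,
                    mapping: Dict[int, float], default: float) -> float:
    """Average the mapped Chezy values of the pixels in a square window around a node."""
    half = side_pixels // 2
    values = []
    for r in range(row - half, row + half + 1):
        for c in range(col - half, col + half + 1):
            if 0 <= r < len(raster) and 0 <= c < len(raster[r]):
                values.append(mapping.get(raster[r][c], default))
    # Equal weights are an assumption of this sketch.
    return sum(values) / len(values) if values else default


NLCD_CHEZY_SUBSET = {11: 110.0, 41: 22.0, 42: 20.0, 82: 59.0}  # excerpt of the NLCD table
print(composite_chezy([[11, 11], [41, 41]], 0, 0, 2, NLCD_CHEZY_SUBSET, 60.0))  # 66.0
```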

If the landuse type is chosen as NLCD or C-CAP, the default values below are used in the calculation. To use a different landuse raster type, or values that differ from the defaults, specify the raster type as "Other" and provide a CSV file with the desired values.

Chezy Friction NLCD Mapping Table

| Code | Description | Friction |
|---|---|---|
| 0 | Background | 60 |
| 1 | Unclassified | 60 |
| 11 | Open Water | 110 |
| 12 | Perennial Ice/Snow | 220 |
| 21 | Developed Open Space | 110 |
| 22 | Developed Low Intensity | 44 |
| 23 | Developed Medium Intensity | 22 |
| 24 | Developed High Intensity | 15 |
| 31 | Barren Land (Rock/Sand/Clay) | 24 |
| 41 | Deciduous Forest | 22 |
| 42 | Evergreen Forest | 20 |
| 43 | Mixed Forest | 22 |
| 51 | Dwarf Scrub | 55 |
| 52 | Shrub/Scrub | 44 |
| 71 | Grassland/Herbaceous | 64 |
| 72 | Sedge/Herbaceous | 73 |
| 73 | Lichens | 81 |
| 74 | Moss | 87 |
| 81 | Pasture/Hay | 66 |
| 82 | Cultivated Crops | 59 |
| 90 | Woody Wetlands | 22 |
| 95 | Emergent Herbaceous Wetlands | 48 |
| 91 | Palustrine Forested Wetland | 22 |
| 92 | Palustrine Scrub/Shrub Wetland | 45 |
| 93 | Estuarine Forested Wetland | 22 |
| 94 | Estuarine Scrub/Shrub Wetland | 45 |
| 96 | Palustrine Emergent Wetland (Persistent) | 48 |
| 97 | Estuarine Emergent Wetland | 48 |
| 98 | Palustrine Aquatic Bed | 150 |
| 99 | Estuarine Aquatic Bed | 150 |

Chezy Friction CCAP Mapping Table

| Code | Description | Friction |
|---|---|---|
| 0 | Background | 60 |
| 1 | Unclassified | 60 |
| 2 | Developed High Intensity | 15 |
| 3 | Developed Medium Intensity | 22 |
| 4 | Developed Low Intensity | 44 |
| 5 | Developed Open Space | 110 |
| 6 | Cultivated Crops | 59 |
| 7 | Pasture/Hay | 66 |
| 8 | Grassland/Herbaceous | 64 |
| 9 | Deciduous Forest | 22 |
| 10 | Evergreen Forest | 20 |
| 11 | Mixed Forest | 22 |
| 12 | Scrub/Shrub | 44 |
| 13 | Palustrine Forested Wetland | 22 |
| 14 | Palustrine Scrub/Shrub Wetland | 45 |
| 15 | Palustrine Emergent Wetland (Persistent) | 48 |
| 16 | Estuarine Forested Wetland | 22 |
| 17 | Estuarine Scrub/Shrub Wetland | 45 |
| 18 | Estuarine Emergent Wetland | 48 |
| 19 | Unconsolidated Shore | 60 |
| 20 | Barren Land | 24 |
| 21 | Open Water | 110 |
| 22 | Palustrine Aquatic Bed | 150 |
| 23 | Estuarine Aquatic Bed | 150 |
| 24 | Perennial Ice/Snow | 220 |
| 25 | Tundra | 60 |

Current Location in Toolbox

Datasets/Chezy Friction

Related Tools

Compare Datasets

The Compare Datasets tool creates a new dataset that represents the difference between two specified datasets. The order of operations is "Dataset 1" - "Dataset 2". This tool is often used to evaluate the impact of making a change in a simulation such as restricting flow to a limited floodway, or changing the roughness values.

This tool differs from a straight difference using the dataset calculator because it does not require that the datasets be on the same geometry. If the second dataset is on a different geometry, it will be linearly interpolated to the geometry of the first dataset. The tool also assigns values to areas that are active in only one of the two datasets so that these areas can be identified.

Input Parameters

- Dataset 1 – Select the first dataset to compare.

- Dataset 2 – Select the second dataset to compare. This has the same options available as Dataset 1 for dealing with inactive values.

- Inactive values option – From the drop-down menu, select the desired approach for handling inactive values in the dataset.

- "Use specified value for inactive value" – A specified value will replace inactive values.

- "Inactive values result in an inactive value" – Inactive values will be left as inactive in the comparison dataset.

- Specified value dataset 1 – Enter a value that will be used for inactive values for dataset 1.

- Specified value dataset 2 – Enter a value that will be used for inactive values for dataset 2.

Output Parameters

- Output dataset name – Enter the name of the new comparison dataset.
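The two inactive-value options can be sketched as follows. This Python sketch assumes both datasets have already been interpolated to the same nodes (the interpolation step is not shown), represents each dataset as values plus an active flag per node, and uses `None` for an inactive result; the function name and the `specified` parameter are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def compare_datasets(values1: Sequence[float], active1: Sequence[bool],
                     values2: Sequence[float], active2: Sequence[bool],
                     specified: Optional[Tuple[float, float]] = None) -> List[Optional[float]]:
    """Dataset 1 - Dataset 2 per node.

    specified=None: an inactive value in either dataset gives an inactive (None) result.
    specified=(s1, s2): inactive values are replaced by s1 / s2 before differencing.
    """
    result: List[Optional[float]] = []
    for v1, a1, v2, a2 in zip(values1, active1, values2, active2):
        if specified is None:
            result.append(v1 - v2 if a1 and a2 else None)
        else:
            x1 = v1 if a1 else specified[0]
            x2 = v2 if a2 else specified[1]
            result.append(x1 - x2)
    return result


d1, a1 = [5.0, 3.0, 0.0], [True, True, False]
d2, a2 = [2.0, 0.0, 4.0], [True, False, True]
print(compare_datasets(d1, a1, d2, a2))                        # [3.0, None, None]
print(compare_datasets(d1, a1, d2, a2, specified=(0.0, 0.0)))  # [3.0, 3.0, -4.0]
```

With specified values, nodes that are active in only one dataset still receive a value, which is what makes them identifiable in the result.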

Current Location in toolbox

Datasets/Compare Datasets

Related Tools

Directional Roughness

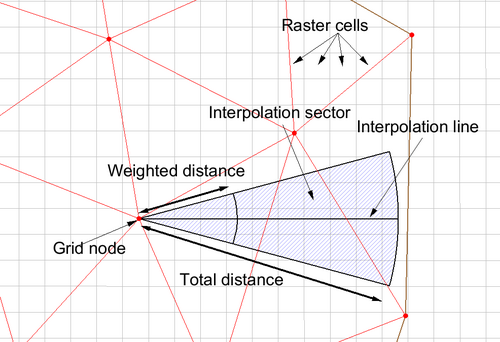

The Landuse Raster to Directional Roughness tool creates 12 scalar datasets. Each represents the reduction in the wind force coming from a specific direction, and each direction represents a 30 degree wedge of the full circle. As noted in the ADCIRC user's manual, the first of these 12 directions corresponds to wind reduction for wind blowing to the east (E), or coming from the west. This reflects the reduction of wind force caused by vegetation to the west of the grid point. The second direction is for wind blowing to the ENE. The directions continue in counterclockwise order around the point (NNE, N, NNW, WNW, W, WSW, SSW, S, SSE, ESE).

The tool computes each of these 12 scalar values for each node in the grid based on a provided land use map. The NLCD and C-CAP land cover formats are encoded as displayed in the following tables. Custom tables can be applied using the Other option described below and providing a mapping file. The pixel values are combined using a weighting function. The weight of each pixel included in the sampling is based on the pixel distance (d) from the node point. The pixel weight is computed as d^2/(2 * dw^2). The term dw is defined below as well.
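A key bookkeeping step is deciding which of the 12 directions a given pixel contributes to. A pixel affects the direction whose wind passes over it before reaching the node, so it belongs to the direction opposite its bearing from the node (vegetation to the west affects wind blowing to the east). This Python sketch assumes each 30 degree wedge is centered on its direction and corresponds to the "Sector" method; the pixel weighting is not shown, and the names are hypothetical.

```python
import math

# The 12 directions in the order listed above (wind blowing TO each direction).
DIRECTIONS = ["E", "ENE", "NNE", "N", "NNW", "WNW", "W", "WSW", "SSW", "S", "SSE", "ESE"]


def direction_index(dx: float, dy: float) -> int:
    """Index of the wind direction for which a pixel at offset (dx, dy) lies upwind of the node."""
    bearing = math.degrees(math.atan2(dy, dx))  # Cartesian angle of the pixel, CCW from East
    downwind = (bearing + 180.0) % 360.0        # wind crossing this pixel blows toward the opposite side
    return int(((downwind + 15.0) // 30.0) % 12)  # 30 degree wedges centered on each direction


# A pixel directly west of the node contributes to the first (E) direction.
print(DIRECTIONS[direction_index(-1.0, 0.0)])  # E
```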

Input Parameters

- Input landuse raster – Select which landuse raster in the project will be the input.

- Method – Select which interpolation method will be used for computation of the conversion.

- "Linear" – Computations will combine pixel values along a line down the center of the directional wedge.

- "Sector" – Computations will combine pixel values for all pixels included in the sector for the direction.

- Total distance – Defines the extent of the line or sector away from the node point. This is measured in units of the display projection.

- Weighted distance – Defines the distance from the node point of maximum influence on the directional roughness (dw).

- Target grid – Select the target grid for which the datasets will be computed.

- Landuse raster type – Select which type the landuse raster is.

- "NLCD" – Sets the landuse raster type to National Land Cover Dataset (NLCD). A mapping table file for NLCD can be found here and down below.

- "C-CAP" – Sets the landuse raster type to Coastal Change Analysis Program (C-CAP). A mapping table file for C-CAP can be found here and down below.

- "Other" – Sets the landuse raster type to be set by the user. This selection adds an option to the dialog to select a mapping table (csv file).

- Landuse to directional roughness mapping table – The Select File... button allows a table file to be selected. Its full file name will appear on the box to its right.

- Default wind reduction value – Set the default level of wind reduction for the new dataset. This is assigned for all 12 datasets for mesh nodes not inside the landuse raster.

Output Parameters

- Output wind reduction dataset – Enter the name for the new wind reduction dataset.

Directional Roughness NLCD Mapping Table

| Code | Description | Roughness |
|---|---|---|
| 0 | Background | 0 |
| 1 | Unclassified | 0 |
| 11 | Open Water | 0.001 |
| 12 | Perennial Ice/Snow | 0.012 |
| 21 | Developed Open Space | 0.1 |
| 22 | Developed Low Intensity | 0.3 |
| 23 | Developed Medium Intensity | 0.4 |
| 24 | Developed High Intensity | 0.55 |
| 31 | Barren Land (Rock/Sand/Clay) | 0.04 |
| 41 | Deciduous Forest | 0.65 |
| 42 | Evergreen Forest | 0.72 |
| 43 | Mixed Forest | 0.71 |
| 51 | Dwarf Scrub | 0.1 |
| 52 | Shrub/Scrub | 0.12 |
| 71 | Grassland/Herbaceous | 0.04 |
| 72 | Sedge/Herbaceous | 0.03 |
| 73 | Lichens | 0.025 |
| 74 | Moss | 0.02 |
| 81 | Pasture/Hay | 0.06 |
| 82 | Cultivated Crops | 0.06 |
| 90 | Woody Wetlands | 0.55 |
| 95 | Emergent Herbaceous Wetlands | 0.11 |
| 91 | Palustrine Forested Wetland | 0.55 |
| 92 | Palustrine Scrub/Shrub Wetland | 0.12 |
| 93 | Estuarine Forested Wetland | 0.55 |
| 94 | Estuarine Scrub/Shrub Wetland | 0.12 |
| 96 | Palustrine Emergent Wetland (Persistent) | 0.11 |
| 97 | Estuarine Emergent Wetland | 0.11 |
| 98 | Palustrine Aquatic Bed | 0.03 |
| 99 | Estuarine Aquatic Bed | 0.03 |

Directional Roughness CCAP Mapping Table

| Code | Description | Roughness |
|---|---|---|
| 0 | Background | 0 |
| 1 | Unclassified | 0 |
| 2 | Developed High Intensity | 0.55 |
| 3 | Developed Medium Intensity | 0.4 |
| 4 | Developed Low Intensity | 0.3 |
| 5 | Developed Open Space | 0.1 |
| 6 | Cultivated Crops | 0.06 |
| 7 | Pasture/Hay | 0.06 |
| 8 | Grassland/Herbaceous | 0.04 |
| 9 | Deciduous Forest | 0.65 |
| 10 | Evergreen Forest | 0.72 |
| 11 | Mixed Forest | 0.71 |
| 12 | Scrub/Shrub | 0.12 |
| 13 | Palustrine Forested Wetland | 0.55 |
| 14 | Palustrine Scrub/Shrub Wetland | 0.12 |
| 15 | Palustrine Emergent Wetland (Persistent) | 0.11 |
| 16 | Estuarine Forested Wetland | 0.55 |
| 17 | Estuarine Scrub/Shrub Wetland | 0.12 |
| 18 | Estuarine Emergent Wetland | 0.11 |
| 19 | Unconsolidated Shore | 0.03 |
| 20 | Barren Land | 0.04 |
| 21 | Open Water | 0.001 |
| 22 | Palustrine Aquatic Bed | 0.03 |
| 23 | Estuarine Aquatic Bed | 0.03 |
| 24 | Perennial Ice/Snow | 0.012 |
| 25 | Tundra | 0.03 |

Current Location in Toolbox

Datasets/Directional Roughness

Related Tools

Filter Dataset Values

This tool creates a new dataset based on specified filtering criteria. The filtering is applied in the order specified. This means that as soon as a value passes a test, it will not be filtered by subsequent tests. The tool has the following options:

Input Parameters

- Input dataset – Select which dataset in the project will be filtered to create the new dataset.

- If condition – select the filtering criteria that will be used. Options include:

- < (less than)

- <= (less than or equal to)

- > (greater than)

- >= (greater than or equal to)

- equal

- not equal

- null

- not null

- Assign on true – If the value passes the specified filter, the following can be assigned:

- original (no change)

- specify (a user specified value)

- null (the dataset null value)

- true (1.0)

- false (0.0)

- time – The first time the condition was met. Time can be specified in seconds, minutes, hours or days, and includes fractional values (such as 3.27 hours).

- Assign on false – If the value passes none of the criteria, a default value can be assigned as follows:

- original (no change)

- specify (a user specified value)

- null (the dataset null value)

- true (1.0)

- false (0.0)

Output Parameters

- Output dataset – Enter the name for the filtered dataset
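The ordered, first-match behavior of the filter can be sketched per value as below. This Python sketch assumes each rule is a (condition, threshold, assign-on-true) tuple, represents the dataset null value as `None`, and treats any numeric action as the "specify" option; the "time" option for transient datasets is omitted. All names are hypothetical.

```python
import operator
from typing import Optional, Sequence, Tuple, Union

Action = Union[str, float]  # "original", "null", "true", "false", or a specified number
_COMPARE = {
    "<": operator.lt, "<=": operator.le, ">": operator.gt, ">=": operator.ge,
    "equal": operator.eq, "not equal": operator.ne,
}


def _assign(action: Action, value: Optional[float]) -> Optional[float]:
    if action == "original":
        return value
    if action == "null":
        return None
    if action == "true":
        return 1.0
    if action == "false":
        return 0.0
    return float(action)  # "specify": a user-supplied number


def filter_value(value: Optional[float], rules: Sequence[Tuple[str, float, Action]],
                 on_false: Action) -> Optional[float]:
    """Apply rules in order; the first rule the value passes decides the result."""
    for condition, threshold, on_true in rules:
        if condition == "null":
            passed = value is None
        elif condition == "not null":
            passed = value is not None
        else:
            passed = value is not None and _COMPARE[condition](value, threshold)
        if passed:
            return _assign(on_true, value)  # later rules are not evaluated
    return _assign(on_false, value)


rules = [("<", 0.0, "null"), (">=", 2.0, "true")]
print([filter_value(v, rules, "false") for v in [-1.0, 3.0, 1.0]])  # [None, 1.0, 0.0]
```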

Geometry Gradient

This tool computes geometry gradient datasets. The tool has the following options:

Input Parameters

- Input dataset – Select the dataset to use in the gradient computations.

- Gradient vector – Select to create a gradient vector dataset.

- Gradient magnitude – Select to create a gradient magnitude dataset. The gradient is calculated as the rise divided by the run.

- Gradient direction – Select to create a gradient direction dataset. Gives the direction in degrees of the maximum gradient at each point.

Output Parameters

- Output gradient vector dataset name – Enter the name for the new gradient vector dataset.

- Output gradient magnitude dataset name – Enter the name for the new gradient magnitude dataset.

- Output gradient direction dataset name – Enter the name for the new gradient direction dataset.
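On a triangulated geometry, the gradient of a linearly interpolated surface is constant within each triangle and equals the slope of the plane through its three nodes. The Python sketch below computes that plane gradient, its magnitude (rise over run), and its direction. It assumes the direction is reported as a Cartesian angle (counterclockwise from the positive X axis) pointing uphill; the tool's actual convention and its method for producing nodal values (for example, averaging the gradients of attached elements) are not stated here, and the function names are hypothetical.

```python
import math
from typing import Tuple

Point3 = Tuple[float, float, float]


def triangle_gradient(p1: Point3, p2: Point3, p3: Point3) -> Tuple[float, float]:
    """Gradient (dz/dx, dz/dy) of the plane through three (x, y, z) points."""
    x21, y21, z21 = p2[0] - p1[0], p2[1] - p1[1], p2[2] - p1[2]
    x31, y31, z31 = p3[0] - p1[0], p3[1] - p1[1], p3[2] - p1[2]
    det = x21 * y31 - x31 * y21  # twice the signed triangle area
    if det == 0:
        raise ValueError("degenerate triangle")
    gx = (z21 * y31 - z31 * y21) / det
    gy = (x21 * z31 - x31 * z21) / det
    return gx, gy


def gradient_magnitude_direction(gx: float, gy: float) -> Tuple[float, float]:
    magnitude = math.hypot(gx, gy)                        # rise over run
    direction = math.degrees(math.atan2(gy, gx)) % 360.0  # uphill, CCW from +X (assumed)
    return magnitude, direction


gx, gy = triangle_gradient((0.0, 0.0, 0.0), (1.0, 0.0, 3.0), (0.0, 1.0, 4.0))
print(gradient_magnitude_direction(gx, gy))
```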

Gravity Waves Courant Number

The Courant number tool is intended to assist in the selection of a time step for a numerical simulation or evaluation of stability of the simulation. This tool can be thought of as the inverse of the Gravity Waves Time Step tool. The Courant number is a spatially varied dimensionless value (dataset) representing the ratio of the time step to the time a particle takes to pass through a cell of a mesh/grid. This is based on the size of the element and the speed of that particle. A Courant number of 1.0 implies that a particle (parcel or drop of water) would take one time step to flow through the element. Since cells/elements are not guaranteed to align with the flow field, this number is an approximation. This dataset is computed at nodes, so it uses the average size of the cells/elements attached to the node. The size is computed in meters if the geometry (mesh / UGrid / ...) is in geographic space.

A Courant number calculation requires a velocity as an input, as shown in the equation below:

$$\text{Courant} = \frac{\text{Velocity} \times \text{TimeStep}}{\text{NodalSpacing}}$$

- TimeStep: a user-specified desired time step size

- Velocity: speed (celerity) of a gravity wave traveling through the medium

- NodalSpacing: average length of the edges connected to each node of the candidate grid/mesh (converted to meters if working in geographic coordinates)

This tool approximates velocity based on the speed of a gravity wave through the water column, which is dependent on the depth of the water column. For purposes of this tool, the speed is assumed to be:

$$\text{Velocity} = \sqrt{\text{Gravity} \times \text{Depth}}$$

Note: the variable in the equation above is "Depth", not "Elevation". For this tool, the input dataset can be the elevation or a computed depth. If an elevation is used, the sign is inverted because the SMS convention is elevation positive up.

Since the equation for velocity requires a positive depth, the depth is forced to be at least 0.1 for the calculation. If the input dataset is elevation, the depth used to compute the Courant number is:

$$\text{Depth} = \max(-\text{Elevation},\ 0.1)$$

If the input dataset is depth, the depth used to compute the Courant number is:

$$\text{Depth} = \max(\text{Input Depth},\ 0.1)$$

The elevation case assumes that the water surface is approximately at 0.0 (Mean Sea Level).

If the water surface is not relatively flat or not near 0.0, the best practice is to compute a depth and use that dataset directly. Depth can be computed as:

$$\text{Depth} = \text{Eta} - \text{Elevation}$$

- Eta: water surface elevation (usually computed by a hydrodynamic model).

- Elevation: bed (bottom) elevation.

In SMS, both of these are measured from a common datum with positive upward.
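The depth rules above can be expressed as a small Python sketch. The function names are hypothetical (not part of the SMS toolbox), and `depth_from_surface` mirrors the recommended pre-processing step rather than an option of the tool itself.

```python
MIN_DEPTH = 0.1  # depth floor stated in the tool description


def depth_from_surface(eta, elevation):
    """Best-practice depth: water surface elevation minus bed elevation (both positive up)."""
    return eta - elevation


def effective_depth(value, is_depth=False):
    """Depth used by the gravity-wave tools at one node.

    If is_depth is False, `value` is an elevation (positive up) and is negated,
    which assumes the water surface is near 0.0 (mean sea level).
    """
    depth = value if is_depth else -value
    return max(depth, MIN_DEPTH)
```

For example, a bed at -4.0 under a water surface of 1.5 gives a depth of 5.5, while a dry node at +2.0 elevation is clamped to 0.1.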

The tool computes the Courant number at each node in the selected geometry based on the specified time step.
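Combining the velocity, depth and Courant number equations, a minimal per-node sketch might look like the following. It assumes the nodal spacings are already in meters and the inputs are plain Python lists; `courant_numbers` is a hypothetical name, not the toolbox's own function.

```python
import math

MIN_DEPTH = 0.1  # depth floor stated in the tool description


def courant_numbers(values, spacings, time_step, gravity=9.80665, is_depth=False):
    """Gravity-wave Courant number at each node (hypothetical helper).

    values: per-node elevation (positive up) or, if is_depth is True, depth.
    spacings: per-node nodal spacing in meters.
    time_step: time step in seconds.
    """
    result = []
    for value, spacing in zip(values, spacings):
        depth = max(value if is_depth else -value, MIN_DEPTH)
        velocity = math.sqrt(gravity * depth)  # gravity wave celerity
        result.append(velocity * time_step / spacing)
    return result
```

Taking `max()` of the returned list gives the single value to compare against the solver's Courant threshold.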

If the input dataset is transient, the resulting Courant number dataset will also be transient.

For numerical solvers that are Courant limited/controlled, any violation of the Courant condition (a Courant number that exceeds the allowable threshold) could result in instability. Therefore, the maximum of the Courant number dataset indicates the stability of the mesh for the specified time step.

This tool is intended to assist with numerical engine stability, and possibly the selection of an appropriate time step size.

The Gravity Waves Courant Number tool dialog has the following options:

Input Parameters

- Input dataset – Specify the elevation dataset (or depth dataset if using depth option).

- Input dataset is depth – Specify if the input dataset is depth.

- Gravity – Enter the gravity value in m/s². Default is 9.80665.

- Use time step – Enter the computational time step value in seconds. Default is 1.0.

Output Parameters

- Gravity waves courant number data set – Enter the name for the new gravity wave Courant number dataset. It is recommended to specify a name that references the input, typically including the time step used in the calculation. The input dataset could also be referenced. The geometry does not need to be included because the dataset resides on that geometry.

Related Topics

Gravity Waves Time Step

The time step tool is intended to assist in selecting a time step for a numerical simulation that is based on Courant-limited calculations. This tool can be thought of as the inverse of the Gravity Waves Courant Number tool; refer to that tool's documentation for clarification. The objective of this tool is to compute the time step that would result in the specified Courant number for the given mesh (at each node or location in the mesh). The user would then select a time step for analysis that is smaller than or equal to the minimum value in the resulting time step dataset (i.e., the minimum time step for any node in the mesh controls the computational time step for the simulation).

The equation used to compute the time step is shown below:

$$\text{Time Step} = \frac{\text{Courant Number} \times \text{NodalSpacing}}{\text{Velocity}}$$

- Time Step: the value computed at each location in the mesh/grid.

- Courant Number: user specified numerical limiting value

- Velocity: speed (celerity) of a gravity wave traveling through the medium

- NodalSpacing: Average length of the edges connected to each node of the candidate grid/mesh (converted to meters if working in geographic coordinates)

Typically, the Courant number specified for this computation is ≤ 1.0 for Courant-limited solvers. Some solvers remain stable for Courant numbers up to 2 or another solver-specific threshold. Because the computation is approximate, specifying a Courant number below the solver's maximum threshold provides a margin that can increase stability.

This tool approximates the velocity with the speed of a gravity wave traveling through the water column, which depends on the depth of the water column. For purposes of this tool, the speed is assumed to be:

$$\text{Velocity} = \sqrt{\text{Gravity} \times \text{Depth}}$$

- Note: the variable in the equation above is "Depth", not "Elevation". For this tool, the input dataset can be the elevation or a computed depth. If an elevation is used, its sign is inverted because SMS uses the convention that elevation is positive upward.

Since the equation for velocity requires a positive depth, the depth is forced to be at least 0.1 for the calculation. If the input dataset is elevation, the depth used to compute the time step is:

$$\text{Depth} = \max(-\text{Elevation},\ 0.1)$$

If the input dataset is depth, the depth used to compute the time step is:

$$\text{Depth} = \max(\text{Input Depth},\ 0.1)$$

The elevation case assumes that the water surface is approximately at 0.0 (Mean Sea Level).

If the water surface is not relatively flat or not near 0.0, the best practice is to compute a depth and use that dataset directly. Depth can be computed as:

$$\text{Depth} = \text{Eta} - \text{Elevation}$$

- Eta: water surface elevation (usually computed by a hydrodynamic model).

- Elevation: bed (bottom) elevation.

In SMS, both of these are measured from a common datum with positive upward.

The tool computes the time step at each node in the selected geometry based on the specified Courant Number.
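A minimal sketch of the per-node time step and of the controlling (minimum) time step is shown below. It assumes nodal spacings in meters and plain Python lists; `time_steps` and `controlling_time_step` are hypothetical names, not toolbox functions.

```python
import math

MIN_DEPTH = 0.1  # depth floor stated in the tool description


def time_steps(values, spacings, courant=1.0, gravity=9.80665, is_depth=False):
    """Time step (s) at each node that yields the given Courant number (hypothetical helper).

    values: per-node elevation (positive up) or, if is_depth is True, depth.
    spacings: per-node nodal spacing in meters.
    """
    steps = []
    for value, spacing in zip(values, spacings):
        depth = max(value if is_depth else -value, MIN_DEPTH)
        velocity = math.sqrt(gravity * depth)  # gravity wave celerity
        steps.append(courant * spacing / velocity)
    return steps


def controlling_time_step(steps):
    """The smallest nodal time step limits the whole simulation."""
    return min(steps)
```

Deeper nodes have faster waves and therefore smaller allowable time steps, so they usually control the result.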

If the input dataset is transient, the resulting time step dataset will also be transient.

The Gravity Waves Time Step tool dialog has the following options:

Input Parameters

- Input dataset – Specify the elevation dataset (or depth dataset if using depth option).

- Input dataset is depth – Specify if the input dataset is depth.

- Gravity – Enter the gravity value in m/s². Default is 9.80665.

- Use courant number – Enter the Courant number. Default is 1.0.

Output Parameters

- Gravity waves time step dataset – Enter the name for the new gravity wave time step dataset. It is recommended to specify a name that references the input, typically including the Courant number used in the calculation. The input dataset could also be referenced. The geometry does not need to be included because the dataset resides on that geometry.

Related Topics

Landuse Raster to Mannings N

The Landuse Raster to Mannings N tool is used to populate the spatial attributes for the Manning's N roughness coefficient at the sea floor. A new dataset is created from an NLCD, C-CAP, or other land use raster.

The tool combines values from the pixels of the raster object specified as a parameter. For each node in the geometry, an "area of influence" is computed: a square centered on the node whose side length is the average length of the edges connected to the node in the target grid. All of the raster values within the area of influence are extracted from the specified raster object, and a composite Manning's N roughness value is computed as a weighted average of the Manning's N values those pixels map to. If a node lies outside the extents of the specified raster object, the default value is used as the Manning's N roughness value at the node.
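The area-of-influence averaging can be sketched as below. Several details are assumptions because the text does not specify them: the raster is a north-up grid of square pixels, a pixel is included when its center falls inside the square, each included pixel receives equal weight, and codes missing from the mapping table are ignored. `composite_mannings_n` is a hypothetical helper.

```python
def composite_mannings_n(node_xy, spacing, raster, origin, cell_size, n_table, default_n):
    """Composite Manning's N for one node from a land use raster (sketch).

    raster: 2-D list of land use codes, row 0 at the top (north-up).
    origin: (x_min, y_max) of the raster's top-left corner.
    cell_size: pixel width and height (square pixels assumed).
    n_table: dict mapping land use code -> Manning's N.
    """
    x, y = node_xy
    half = spacing / 2.0  # square of side `spacing` centered on the node
    x_min, y_max = origin
    values = []
    for row_idx, row in enumerate(raster):
        cy = y_max - (row_idx + 0.5) * cell_size  # pixel-center y
        if abs(cy - y) > half:
            continue
        for col_idx, code in enumerate(row):
            cx = x_min + (col_idx + 0.5) * cell_size  # pixel-center x
            if abs(cx - x) <= half and code in n_table:
                values.append(n_table[code])
    if not values:
        return default_n  # node outside the raster (or no mapped pixels)
    # Assumption: equal weight per included pixel.
    return sum(values) / len(values)
```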

The tool has the following options:

Input Parameters

- Input landuse raster – This is a required input parameter. Specify which raster in the project to use when determining the Manning's N roughness values.

- Landuse raster type – This is a required parameter. Specify what type of landuse raster to use.

- "NLCD" – Sets the landuse raster type to National Land Cover Database (NLCD). The NLCD mapping table is listed below.

- "C-CAP" – Sets the landuse raster type to Coastal Change Analysis Program (C-CAP). The C-CAP mapping table is listed below.

- "Other" – Sets the landuse raster type to use a table of values provided by the user.

- Target grid – This is a required input parameter. Specify which grid/mesh the Manning's N roughness dataset will be created for.

- Landuse to Mannings N mapping table – The Select File... button allows a table file to be selected for the "Other" landuse raster type. The file's full name will appear in the box to its right. This must be a CSV file with the following columns of data: Code, Description, Manning's N. See the example NLCD and C-CAP tables below.

- Default Mannings N option – Set the default Manning's N roughness value for nodes that do not lie inside the specified raster object. Choose "Constant" to use a single value or "Dataset" to use values from an existing dataset.

- "Constant" – Uses a constant value as the default Manning's N.

- Default Mannings N value – When "Constant" is selected, enter the constant default Manning's N value.

- "Dataset" – Uses a dataset as the default Manning's N.

- Default Mannings N dataset – When "Dataset" is selected, select the dataset that supplies the default Manning's N values.

- Subset mask dataset (optional) – Select a dataset to use as a subset mask. Nodes not marked as active in this dataset are assigned the default value.

Output Parameters

- Output Mannings N dataset – Enter the name for the new Manning's N dataset.

If the landuse type is NLCD or C-CAP, the default values below are used in the calculation. For other landuse raster types, or to use values that differ from the defaults, set the raster type to "Other" and provide a CSV file with the desired values.
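A custom mapping table with the Code, Description, Manning's N columns could be read as follows. This sketch assumes the first row is a header and that codes are integers; neither is stated in the tool description, and `read_mapping_table` is a hypothetical name.

```python
import csv
import io


def read_mapping_table(csv_file):
    """Read a Code, Description, Manning's N table into {code: n}.

    csv_file: any text file object; the first row is assumed to be a header.
    """
    reader = csv.reader(csv_file)
    next(reader)  # skip the header row
    table = {}
    for row in reader:
        if not row or not row[0].strip():
            continue  # ignore blank lines
        code, n_value = row[0], row[2]  # row[1] is the description
        table[int(code)] = float(n_value)
    return table


# Usage with an in-memory file holding two rows from the NLCD table.
sample = io.StringIO(
    "Code,Description,Manning's N\n"
    "11,Open Water,0.02\n"
    "41,Deciduous Forest,0.1\n"
)
table = read_mapping_table(sample)
```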

Mannings Roughness NLCD Mapping Table

| Code | Description | Manning's N |
|---|---|---|
| 0 | Background | 0.025 |
| 1 | Unclassified | 0.025 |
| 11 | Open Water | 0.02 |
| 12 | Perennial Ice/Snow | 0.01 |
| 21 | Developed Open Space | 0.02 |
| 22 | Developed Low Intensity | 0.05 |
| 23 | Developed Medium Intensity | 0.1 |
| 24 | Developed High Intensity | 0.15 |
| 31 | Barren Land (Rock/Sand/Clay) | 0.09 |
| 41 | Deciduous Forest | 0.1 |
| 42 | Evergreen Forest | 0.11 |
| 43 | Mixed Forest | 0.1 |
| 51 | Dwarf Scrub | 0.04 |
| 52 | Shrub/Scrub | 0.05 |
| 71 | Grassland/Herbaceous | 0.034 |
| 72 | Sedge/Herbaceous | 0.03 |
| 73 | Lichens | 0.027 |
| 74 | Moss | 0.025 |
| 81 | Pasture/Hay | 0.033 |
| 82 | Cultivated Crops | 0.037 |
| 90 | Woody Wetlands | 0.1 |
| 95 | Emergent Herbaceous Wetlands | 0.045 |
| 91 | Palustrine Forested Wetland | 0.1 |
| 92 | Palustrine Scrub/Shrub Wetland | 0.048 |
| 93 | Estuarine Forested Wetland | 0.1 |
| 94 | Estuarine Scrub/Shrub Wetland | 0.048 |
| 96 | Palustrine Emergent Wetland (Persistent) | 0.045 |
| 97 | Estuarine Emergent Wetland | 0.045 |
| 98 | Palustrine Aquatic Bed | 0.015 |
| 99 | Estuarine Aquatic Bed | 0.015 |

Mannings Roughness CCAP Mapping Table

| Code | Description | Manning's N |
|---|---|---|
| 0 | Background | 0.025 |
| 1 | Unclassified | 0.025 |
| 2 | Developed High Intensity | 0.15 |
| 3 | Developed Medium Intensity | 0.1 |
| 4 | Developed Low Intensity | 0.05 |
| 5 | Developed Open Space | 0.02 |
| 6 | Cultivated Crops | 0.037 |
| 7 | Pasture/Hay | 0.033 |
| 8 | Grassland/Herbaceous | 0.034 |
| 9 | Deciduous Forest | 0.1 |
| 10 | Evergreen Forest | 0.11 |
| 11 | Mixed Forest | 0.1 |
| 12 | Scrub/Shrub | 0.05 |
| 13 | Palustrine Forested Wetland | 0.1 |
| 14 | Palustrine Scrub/Shrub Wetland | 0.048 |
| 15 | Palustrine Emergent Wetland (Persistent) | 0.045 |
| 16 | Estuarine Forested Wetland | 0.1 |
| 17 | Estuarine Scrub/Shrub Wetland | 0.048 |
| 18 | Estuarine Emergent Wetland | 0.045 |
| 19 | Unconsolidated Shore | 0.03 |
| 20 | Barren Land | 0.09 |
| 21 | Open Water | 0.02 |
| 22 | Palustrine Aquatic Bed | 0.015 |
| 23 | Estuarine Aquatic Bed | 0.015 |
| 24 | Perennial Ice/Snow | 0.01 |
| 25 | Tundra | 0.03 |

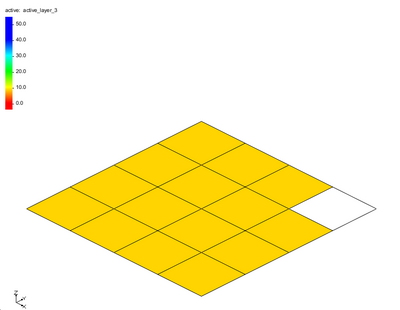

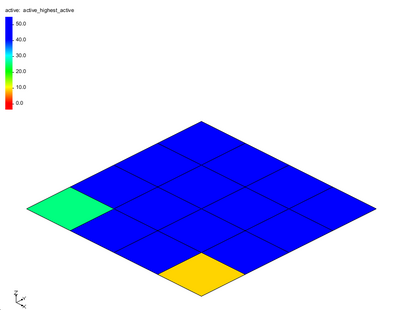

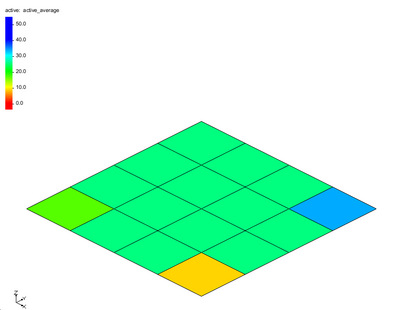

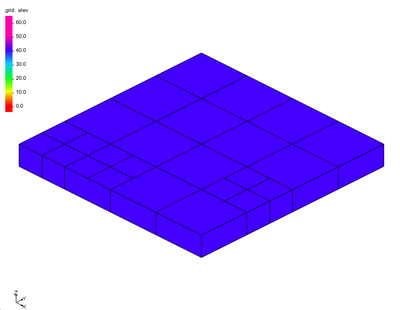

Map Activity

This tool builds a dataset with values copied from one dataset and activity mapped from another. The tool has the following options:
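Conceptually, the output keeps the values of the value dataset and the activity flags of the activity dataset. The sketch below assumes both datasets are per-node lists on the same geometry and represents activity as booleans; `map_activity` and its return layout are hypothetical, not the toolbox's data model.

```python
def map_activity(values, activity_source):
    """Copy values from one dataset and activity flags from another (sketch).

    values: per-node values from the value dataset.
    activity_source: per-node activity flags (truthy = active) from the activity dataset.
    Both lists must describe the same geometry (same node count and order).
    """
    if len(values) != len(activity_source):
        raise ValueError("datasets must be on the same geometry")
    return {"values": list(values), "active": [bool(a) for a in activity_source]}
```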

Input Parameters

- Value dataset – Select the dataset that will define the values for the new dataset.

- Activity dataset – Select the dataset that will define the activity for the new dataset.

Output Parameters

- Output dataset – Enter the name for the new activity dataset.

Current Location in Toolbox

Datasets/Map Activity

Merge Dataset

This tool takes two datasets that have non-overlapping time steps and combines them into a single dataset. Currently, the time steps of the first dataset must come before the time steps of the second dataset; this restriction may be removed in the future. The two datasets should be on the same geometry. The time step values for the input datasets may be specified using the time tools. This is particularly necessary for steady-state datasets that represent a single point in time, because they may not have a time value assigned.
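The merge rules above (same geometry, dataset two starting after dataset one ends) can be sketched as follows, assuming each dataset is a sorted list of time values plus one list of node values per time step. `merge_datasets` is a hypothetical name.

```python
def merge_datasets(times_one, values_one, times_two, values_two):
    """Append the time steps of dataset two to those of dataset one (sketch).

    times_*: sorted lists of time step values.
    values_*: one list of per-node values for each time step.
    """
    if times_one and times_two and times_two[0] <= times_one[-1]:
        raise ValueError("dataset two must start after dataset one ends")
    node_counts = {len(v) for v in values_one + values_two}
    if len(node_counts) > 1:
        raise ValueError("datasets must be on the same geometry")
    return times_one + times_two, values_one + values_two
```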

Input parameters

- Dataset one – Select the dataset that will define the first time steps in the output.

- Dataset two – Select the dataset whose time steps will be appended to the time steps from the first argument.

Output parameters

- Output dataset – Enter the name for the merged dataset.

Related Tools

Point Spacing

The Point Spacing tool calculates the average length of the edges connected to each point in a mesh/UGrid or scatter set.

This operation is the inverse of scalar paving in Mesh Generation, and the result can be thought of as a size function (often referred to as a nodal spacing function).

The size function can then be smoothed and used to generate a mesh with improved mesh quality.
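The average edge length per point can be sketched as follows, assuming planar (x, y) coordinates and an explicit list of unique edges (for a mesh, these could be collected from the element sides). Returning 0.0 for points with no edges is an assumption, and `point_spacing` is a hypothetical name.

```python
import math
from collections import defaultdict


def point_spacing(points, edges):
    """Average length of the edges connected to each point (sketch).

    points: list of (x, y) coordinates.
    edges: list of (i, j) point-index pairs, each undirected edge listed once.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for i, j in edges:
        length = math.dist(points[i], points[j])
        for k in (i, j):  # each edge contributes to both of its end points
            totals[k] += length
            counts[k] += 1
    return [totals[k] / counts[k] if counts[k] else 0.0 for k in range(len(points))]
```

For a single 3-4-5 triangle, the three points get spacings of 3.5, 4.0 and 4.5.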

Input parameters

- Target grid – This is a required input parameter. Specify which grid/mesh the point spacing dataset will be created for.

Output parameters

- Output dataset – Enter the name for the point spacing dataset.

Current Location in Toolbox

Datasets/Point Spacing

Related Tools

Primitive Weighting